Chapter 6

Modern CNN Architectures

"Architectures like ResNet and DenseNet have fundamentally changed how we think about deep learning. Implementing these models in Rust opens new possibilities for performance and scalability in AI." — Geoffrey Hinton

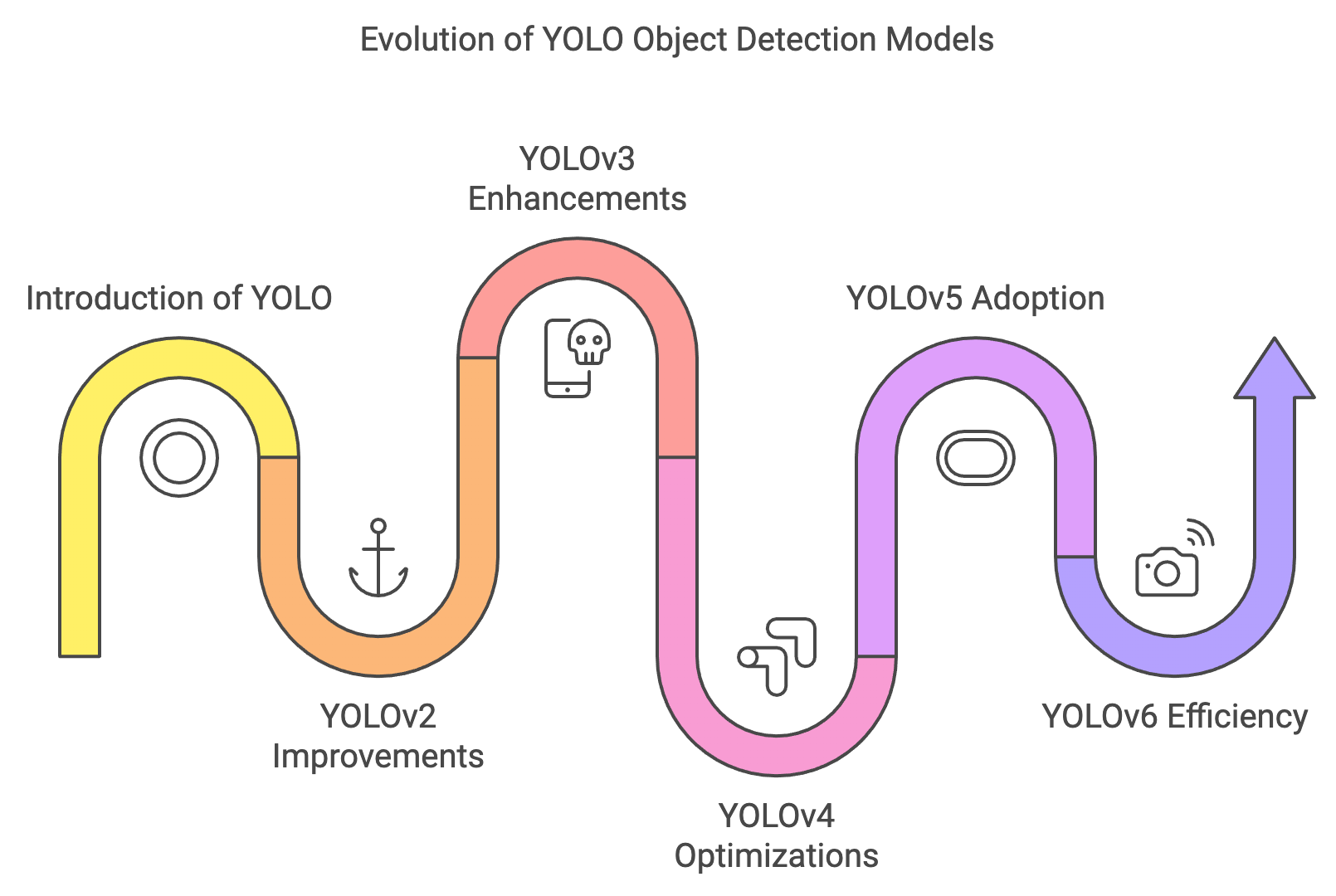

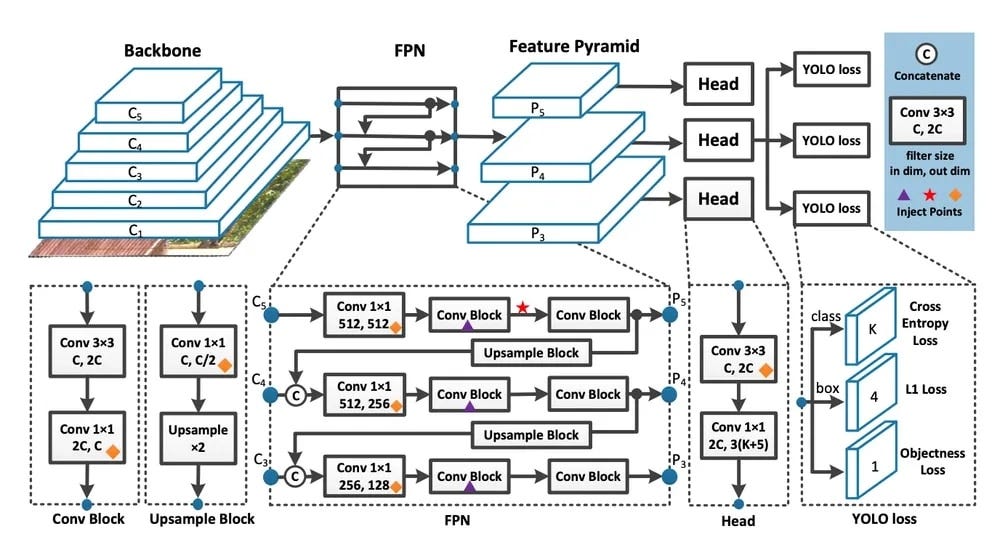

Chapter 6 of "Deep Learning via Rust" (DLVR) offers a detailed exploration of modern Convolutional Neural Networks (CNNs), tracing their evolution from foundational models to the advanced architectures shaping contemporary deep learning. The chapter introduces key networks such as VGG, ResNet, Inception, DenseNet, EfficientNet, MobileNet, and YOLO, emphasizing their innovations in addressing challenges like the vanishing gradient problem and the demand for scalable, efficient models. It delves into the principles and practical implementations of these architectures, highlighting features like residual connections, multi-scale feature extraction, dense connectivity, and efficient scaling strategies. Special attention is given to the neural architecture search (NAS) behind EfficientNet and MobileNet, to EfficientNet's compound scaling of depth, width, and resolution, and to YOLO's real-time object detection capabilities. Through Rust-based implementations using libraries like tch-rs, readers gain hands-on experience in building, training, and fine-tuning these architectures, leveraging Rust’s performance for cutting-edge deep learning applications.

6.1. Introduction to Modern CNN Architectures

Convolutional Neural Networks (CNNs) have undergone significant evolution since their inception, progressing from simple architectures like LeNet to highly complex, powerful models such as ResNet, Inception, and EfficientNet. The primary focus of CNN evolution has been on increasing both the depth and complexity of networks to improve their ability to learn hierarchical, abstract features from data. Early CNNs like LeNet and AlexNet had only a few convolutional layers, limiting the extent of feature extraction possible. However, with advancements in computation and deep learning research, modern CNN architectures have grown deeper and wider, incorporating architectural innovations that allow them to tackle increasingly complex tasks across domains such as image classification, object detection, and segmentation.

Figure 1: The modern evolution of CNNs.

One of the critical innovations driving the development of modern CNNs has been the ability to make networks deeper while mitigating the challenges associated with training deep networks. One such innovation is the use of residual connections, introduced in ResNet. Residual connections allow information to bypass several layers, thus addressing the vanishing gradient problem, which often plagues deep networks. Mathematically, residual connections allow the network to learn an identity mapping alongside the transformation learned by the layers, formalized as $y = f(x) + x$, where $f(x)$ represents the learned transformation and $x$ is the input passed directly to the output. This allows gradients to flow more effectively during backpropagation, enabling networks to have hundreds of layers without suffering from performance degradation.
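The effect of the shortcut on gradient flow can be illustrated with a toy scalar model. The sketch below assumes that every layer is a linear map $f(x) = w \cdot x$ with a small weight $w$; this is a simplification chosen for illustration, not how a real convolutional layer behaves. Under that assumption the derivative through $n$ plain layers is $w^n$, while through $n$ residual layers it is $(1 + w)^n$. The function names are hypothetical.

```rust
/// Gradient of the output with respect to the input through `depth` plain
/// layers, where each layer computes y = w * x (toy scalar assumption).
fn plain_chain_gradient(w: f64, depth: u32) -> f64 {
    (0..depth).fold(1.0, |grad, _| grad * w)
}

/// Same chain in residual form: each layer computes y = w * x + x,
/// so its local derivative is w + 1 instead of w.
fn residual_chain_gradient(w: f64, depth: u32) -> f64 {
    (0..depth).fold(1.0, |grad, _| grad * (w + 1.0))
}

fn main() {
    let w = 0.01;
    for depth in [1, 10, 50] {
        println!(
            "depth {:2}: plain = {:.3e}, residual = {:.3e}",
            depth,
            plain_chain_gradient(w, depth),
            residual_chain_gradient(w, depth)
        );
    }
}
```

In this toy setting the plain gradient shrinks geometrically with depth, whereas the residual gradient stays close to 1 because the identity term contributes a constant 1 to every layer's derivative.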

Inception modules, introduced in the Inception architecture, provide another important innovation in CNN design by enabling multi-scale feature extraction. Instead of applying a single filter size at each layer, Inception modules apply multiple filters (such as 1x1, 3x3, and 5x5) in parallel, allowing the network to capture both fine and coarse features from the same input. This multi-scale approach is mathematically represented as $y = \text{Concat}(f_{1x1}(x), f_{3x3}(x), f_{5x5}(x))$, where the results of applying different filters are concatenated into a single output tensor. This architectural design allows the network to efficiently capture a diverse range of patterns, from small details to large structures.
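The concatenation in this formula has a simple bookkeeping consequence: the output channel count is the sum of the branch channel counts. The sketch below works through that arithmetic with plain Rust. The `Branch` type and the channel numbers are hypothetical, each branch is assumed to use "same" padding so that the spatial sizes match, and the 1x1 reduction layers and pooling branch of the full Inception design are omitted.

```rust
/// One parallel branch of an Inception-style module: a k x k convolution
/// producing `out_channels` feature maps (hypothetical helper type).
struct Branch {
    kernel: usize,
    out_channels: usize,
}

/// Channel count after concatenating all branch outputs along the channel axis.
fn concat_channels(branches: &[Branch]) -> usize {
    branches.iter().map(|b| b.out_channels).sum()
}

/// Weights plus biases across all branches for `in_channels` input maps.
fn module_params(in_channels: usize, branches: &[Branch]) -> usize {
    branches
        .iter()
        .map(|b| b.kernel * b.kernel * in_channels * b.out_channels + b.out_channels)
        .sum()
}

fn main() {
    let branches = [
        Branch { kernel: 1, out_channels: 64 },
        Branch { kernel: 3, out_channels: 128 },
        Branch { kernel: 5, out_channels: 32 },
    ];
    println!("output channels: {}", concat_channels(&branches));
    println!("parameters: {}", module_params(192, &branches));
}
```

The 5x5 branch contributes a large share of the parameters despite having the fewest output channels, which is the cost that 1x1 reductions are meant to control in the full architecture.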

Another major innovation in modern CNN architectures is the focus on parameter efficiency, as demonstrated in EfficientNet. The EfficientNet family of models introduces a method called compound scaling, which scales network width, depth, and input resolution in a balanced manner. By optimizing how networks grow across these dimensions, EfficientNet achieves high accuracy while maintaining computational efficiency. This innovation is particularly valuable in resource-constrained environments, such as mobile devices, where both performance and efficiency are critical.
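Compound scaling can be written as depth $d = \alpha^{\phi}$, width $w = \beta^{\phi}$, and resolution $r = \gamma^{\phi}$ for a single coefficient $\phi$. The following sketch computes these multipliers using the base values reported in the EfficientNet paper ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$); the function names are illustrative, and the FLOPs estimate relies on the usual approximation that cost grows with $d \cdot w^2 \cdot r^2$.

```rust
// Base coefficients reported in the EfficientNet paper.
const ALPHA: f64 = 1.2; // depth
const BETA: f64 = 1.1; // width
const GAMMA: f64 = 1.15; // resolution

/// Multipliers (depth, width, resolution) at compound coefficient `phi`.
fn compound_scale(phi: f64) -> (f64, f64, f64) {
    (ALPHA.powf(phi), BETA.powf(phi), GAMMA.powf(phi))
}

/// Approximate FLOPs growth, assuming cost scales with depth * width^2 * resolution^2.
fn flops_factor(phi: f64) -> f64 {
    let (d, w, r) = compound_scale(phi);
    d * w * w * r * r
}

fn main() {
    for phi in [0.0, 1.0, 2.0, 3.0] {
        let (d, w, r) = compound_scale(phi);
        println!(
            "phi = {}: depth x{:.2}, width x{:.2}, resolution x{:.2}, FLOPs x{:.2}",
            phi, d, w, r, flops_factor(phi)
        );
    }
}
```

Because $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$, each unit increase of $\phi$ roughly doubles the computational cost.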

Several of the most impactful modern CNN architectures highlight these innovations. VGG networks, for example, demonstrated the importance of depth by stacking small convolutional filters (3x3) and increasing the number of layers to improve representational capacity. Although VGG networks are computationally expensive due to their large number of parameters, they served as a critical step in understanding how deeper networks could improve performance. ResNet, on the other hand, fundamentally changed how deep networks are built by introducing residual connections, allowing networks with hundreds of layers to be trained effectively. In contrast to VGG, ResNet reduced the number of parameters while maintaining strong performance, making it a more efficient architecture.

The Inception network, with its unique multi-scale feature extraction, brought a new level of flexibility to CNNs. By applying filters of different sizes within the same layer and concatenating their outputs, the Inception architecture allows the model to learn features across various scales without increasing the computational cost dramatically. This makes Inception particularly well-suited for tasks where features of varying sizes need to be captured, such as object detection and scene understanding.

DenseNet further built upon the concept of skip connections by introducing dense connections, where each layer receives input from all preceding layers. This design maximizes feature reuse and improves the flow of gradients, enabling DenseNet to achieve high accuracy with fewer parameters than traditional architectures. Finally, EfficientNet exemplifies the trend toward balancing network complexity with computational efficiency. By introducing a systematic approach to scaling networks across depth, width, and resolution, EfficientNet achieves state-of-the-art performance while using fewer resources, making it an ideal architecture for deployment on edge devices and mobile platforms.
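Dense connectivity has a direct effect on channel counts: if a block starts with $k_0$ channels and every layer adds $k$ new feature maps (the growth rate), then the $l$-th layer receives $k_0 + k(l-1)$ input channels. A minimal sketch of that bookkeeping follows; the function names and example values are hypothetical.

```rust
/// Input channel count seen by each layer of a dense block, where every layer
/// receives the block input plus the outputs of all preceding layers.
fn dense_layer_inputs(k0: usize, growth_rate: usize, num_layers: usize) -> Vec<usize> {
    (0..num_layers).map(|l| k0 + growth_rate * l).collect()
}

/// Channel count leaving the block after all layer outputs are concatenated.
fn dense_block_output(k0: usize, growth_rate: usize, num_layers: usize) -> usize {
    k0 + growth_rate * num_layers
}

fn main() {
    let (k0, k, layers) = (64, 32, 6);
    println!("inputs per layer: {:?}", dense_layer_inputs(k0, k, layers));
    println!("block output channels: {}", dense_block_output(k0, k, layers));
}
```

Each layer only has to produce $k$ new feature maps, which is why dense blocks can stay narrow while still giving later layers access to every earlier feature.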

Despite their numerous benefits, training very deep CNNs presents challenges that have required novel solutions. One major challenge is the vanishing gradient problem, where gradients diminish as they propagate through the layers, making it difficult to update the earlier layers of the network. Innovations such as residual connections in ResNet and dense connections in DenseNet have helped solve this problem by creating direct paths for gradients to flow backward through the network, allowing for more effective training of deep models. Another challenge is overfitting, which becomes more pronounced in deeper networks due to the increased number of parameters. Techniques like dropout, data augmentation, and weight decay have been widely adopted to mitigate overfitting and improve generalization, particularly when training on smaller datasets. Additionally, computational efficiency becomes a concern as networks grow deeper and wider. EfficientNet’s compound scaling strategy addresses this issue by optimizing the growth of network dimensions in a balanced way, ensuring that resources are used efficiently without compromising performance.

In terms of practical implementation, Rust offers a powerful and efficient platform for building and deploying CNNs, particularly in performance-critical applications. The tch-rs crate provides robust tools for defining and training modern CNN architectures in Rust. A typical implementation might involve setting up a ResNet model with residual connections or creating an Inception-like module for multi-scale feature extraction.

The following example implements a simple ResNet-inspired architecture for the CIFAR-10 dataset using the tch-rs library (the tch crate). It consists of a base convolution layer, three residual blocks with increasing numbers of feature maps, and a fully connected layer for classification. The listing starts with the Cargo.toml dependencies and continues with the Rust source.

[dependencies]

anyhow = "1.0"

tch = "0.12"

reqwest = { version = "0.11", features = ["blocking"] }

flate2 = "1.0"

tokio = { version = "1", features = ["full"] }

tar = "0.4.43"

use anyhow::{Result, Context};

use flate2::read::GzDecoder;

use reqwest;

use std::{fs, path::Path};

use tar::Archive;

use tch::{nn, nn::ModuleT, nn::OptimizerConfig, Device, Tensor, vision};

/// URL for the CIFAR-10 dataset

const CIFAR10_URL: &str = "https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz";

/// Function to download and extract the CIFAR-10 dataset.

async fn download_cifar10() -> Result<()> {

let dest = "data/cifar10";

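// Check for an actual data file rather than the directory, so that an interrupted download is retried.

if Path::new(dest).join("test_batch.bin").exists() {

println!("CIFAR-10 dataset already exists, skipping download.");

return Ok(());

}

fs::create_dir_all(dest).context("Failed to create data directory")?;

println!("Downloading CIFAR-10 dataset...");

let response = reqwest::get(CIFAR10_URL).await?;

let bytes = response.bytes().await?;

let tar_gz = GzDecoder::new(&bytes[..]);

let mut archive = Archive::new(tar_gz);

println!("Extracting CIFAR-10 dataset...");

// The archive keeps its files in a `cifar-10-batches-bin/` folder; copy each file

// directly into `dest`, the directory that `load_dir` reads.

for entry in archive.entries()? {

let mut entry = entry?;

if !entry.header().entry_type().is_file() {

continue;

}

let path = entry.path()?.into_owned();

if let Some(file_name) = path.file_name() {

entry.unpack(Path::new(dest).join(file_name))?;

}

}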

println!("CIFAR-10 dataset downloaded and extracted successfully.");

Ok(())

}

#[tokio::main]

async fn main() -> Result<()> {

// Ensure the CIFAR-10 dataset is downloaded and extracted.

download_cifar10().await?;

// Run the ResNet model training.

run_resnet()

}

// Define the ResNet architecture.

#[derive(Debug)]

struct ResNet {

conv1: nn::Conv2D,

bn1: nn::BatchNorm,

layer1: ResidualBlock,

layer2: ResidualBlock,

layer3: ResidualBlock,

fc: nn::Linear,

}

impl ResNet {

fn new(vs: &nn::Path) -> ResNet {

let conv1 = nn::conv2d(vs, 3, 64, 3, Default::default());

let bn1 = nn::batch_norm2d(vs, 64, Default::default());

let layer1 = ResidualBlock::new(vs, 64, 64);

let layer2 = ResidualBlock::new(vs, 64, 128);

let layer3 = ResidualBlock::new(vs, 128, 256);

let fc = nn::linear(vs, 256, 10, Default::default());

ResNet { conv1, bn1, layer1, layer2, layer3, fc }

}

}

impl nn::ModuleT for ResNet {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

xs.apply(&self.conv1)

.apply_t(&self.bn1, train)

.relu()

.max_pool2d_default(2)

.apply_t(&self.layer1, train)

.apply_t(&self.layer2, train)

.apply_t(&self.layer3, train)

.adaptive_avg_pool2d(&[1, 1])

.view([-1, 256])

.apply(&self.fc)

}

}

// Define a Residual Block for ResNet.

#[derive(Debug)]

struct ResidualBlock {

conv1: nn::Conv2D,

bn1: nn::BatchNorm,

conv2: nn::Conv2D,

bn2: nn::BatchNorm,

shortcut: Option<nn::Conv2D>,

}

impl ResidualBlock {

fn new(vs: &nn::Path, in_channels: i64, out_channels: i64) -> ResidualBlock {

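// Padding of 1 keeps the spatial size unchanged, so the block output can be added to the shortcut.

let conv1 = nn::conv2d(vs, in_channels, out_channels, 3, nn::ConvConfig { padding: 1, ..Default::default() });

let bn1 = nn::batch_norm2d(vs, out_channels, Default::default());

let conv2 = nn::conv2d(vs, out_channels, out_channels, 3, nn::ConvConfig { padding: 1, ..Default::default() });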

let bn2 = nn::batch_norm2d(vs, out_channels, Default::default());

let shortcut = if in_channels != out_channels {

Some(nn::conv2d(vs, in_channels, out_channels, 1, Default::default()))

} else {

None

};

ResidualBlock { conv1, bn1, conv2, bn2, shortcut }

}

}

impl nn::ModuleT for ResidualBlock {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

let shortcut = match &self.shortcut {

Some(sc) => xs.apply(sc),

None => xs.shallow_clone(),

};

xs.apply(&self.conv1)

.apply_t(&self.bn1, train)

.relu()

.apply(&self.conv2)

.apply_t(&self.bn2, train)

+ shortcut

}

}

// Function to train and test the ResNet model on the CIFAR-10 dataset.

fn run_resnet() -> Result<()> {

// Load the CIFAR-10 dataset.

let cifar_data = vision::cifar::load_dir("data/cifar10")?;

// Use GPU if available, otherwise use CPU.

let vs = nn::VarStore::new(Device::cuda_if_available());

let net = ResNet::new(&vs.root()); // Initialize the ResNet model.

let mut opt = nn::Adam::default().build(&vs, 1e-4)?; // Set up the optimizer.

// Reshape and normalize the training and test images.

let train_images = cifar_data.train_images / 255.0;

let train_labels = cifar_data.train_labels;

let test_images = cifar_data.test_images / 255.0;

let test_labels = cifar_data.test_labels;

// Training loop for the ResNet model.

for epoch in 1..=20 {

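for (bimages, blabels) in train_images.split(128, 0).into_iter().zip(train_labels.split(128, 0).into_iter()) {

// Move each batch to the same device as the model parameters.

let bimages = bimages.to_device(vs.device());

let blabels = blabels.to_device(vs.device());

let loss = net.forward_t(&bimages, true).cross_entropy_for_logits(&blabels);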

opt.backward_step(&loss); // Backpropagation step.

}

// Calculate and print test accuracy at the end of each epoch.

let test_accuracy = net.batch_accuracy_for_logits(&test_images, &test_labels, vs.device(), 256);

println!("Epoch: {:4}, Test Accuracy: {:5.2}%", epoch, 100. * test_accuracy);

}

Ok(())

}

The program begins by downloading and extracting the CIFAR-10 dataset if it is not already present. The ResNet struct combines an initial convolution with three residual blocks and a fully connected layer for final classification; each residual block contains two convolutional layers and, when the input and output channel counts differ, a 1x1 convolution on the shortcut to match dimensions. The main function runs on the Tokio runtime created by the #[tokio::main] attribute (multi-threaded by default), which is needed because download_cifar10 is asynchronous, and calls that function to ensure the dataset is available. The run_resnet function loads the CIFAR-10 dataset, scales the pixel values, and sets up the ResNet model and the Adam optimizer. It then iterates over 20 epochs, training the model in batches of 128 by computing the cross-entropy loss and backpropagating the gradients. After each epoch, the model's accuracy on the test dataset is calculated and printed, showing how performance changes over time.

In industry, modern CNN architectures such as ResNet, Inception, and EfficientNet have become the backbone of various applications, ranging from image classification to object detection and medical image analysis. These architectures are often fine-tuned on domain-specific datasets using transfer learning, where pre-trained models (trained on large datasets like ImageNet) are adapted to new tasks by updating only the final layers. Rust’s ecosystem, with libraries like tch-rs, supports loading and fine-tuning pre-trained models, allowing developers to leverage state-of-the-art CNN architectures in performance-sensitive environments, such as embedded systems, robotics, and autonomous vehicles.

In conclusion, modern CNN architectures represent the convergence of several innovations aimed at improving depth, efficiency, and performance. By building CNNs in Rust, developers can harness the power of these architectures in a highly efficient, safe, and performance-oriented programming environment, making Rust a compelling choice for deep learning in both research and industry applications.

6.2. Implementing VGG and Its Variants

The VGG architecture, introduced by Simonyan and Zisserman in 2014, is one of the most influential CNN architectures that emphasized the power of depth while maintaining simplicity. The fundamental design principle of VGG is straightforward: it uses small $3 \times 3$ filters consistently throughout the network while increasing the number of layers. This approach allows for the network to capture increasingly complex and abstract features with depth, without dramatically increasing the computational cost as seen with larger filters. The small filter size of $3 \times 3$ ensures that each convolutional layer has a limited receptive field, but by stacking many such layers, VGG can capture larger patterns and hierarchies over deeper layers.

Formally, a $3 \times 3$ convolutional filter can be represented as:

$$ y[i,j] = \sum_{m=0}^{2} \sum_{n=0}^{2} W[m,n] \cdot x[i+m,j+n] + b $$

where $x[i,j]$ is the input pixel value, $W[m,n]$ represents the filter weights, and $b$ is the bias term. This operation applies the filter over the input image, transforming it into a feature map. VGG extends this by applying multiple layers of such small filters, with each layer progressively learning more complex patterns.
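The formula can be translated almost literally into code. The sketch below applies a single $3 \times 3$ filter to a single-channel input with no padding and a stride of 1, so the output is two pixels smaller in each dimension. It is a plain-Rust illustration with a hypothetical function name, not the routine tch-rs uses internally, and it assumes a rectangular input of at least $3 \times 3$.

```rust
/// Valid (unpadded), stride-1 application of a 3x3 filter:
/// y[i][j] = sum over m, n of w[m][n] * x[i + m][j + n], plus the bias b.
fn conv3x3(x: &[Vec<f32>], w: &[[f32; 3]; 3], b: f32) -> Vec<Vec<f32>> {
    let (height, width) = (x.len(), x[0].len());
    let mut y = vec![vec![0.0; width - 2]; height - 2];
    for i in 0..height - 2 {
        for j in 0..width - 2 {
            let mut acc = b;
            for m in 0..3 {
                for n in 0..3 {
                    acc += w[m][n] * x[i + m][j + n];
                }
            }
            y[i][j] = acc;
        }
    }
    y
}

fn main() {
    // A 4x4 input holding the values 1 to 16 in row-major order.
    let x: Vec<Vec<f32>> = (0..4)
        .map(|r| (0..4).map(|c| (r * 4 + c + 1) as f32).collect())
        .collect();
    let sum_filter = [[1.0; 3]; 3]; // every weight is 1, so each output is a window sum
    println!("{:?}", conv3x3(&x, &sum_filter, 0.0));
}
```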

The layer configuration of VGG follows a consistent pattern. It starts with several blocks of convolutional layers, each block followed by max pooling to reduce spatial dimensions, and ends with a series of fully connected layers for classification. VGG typically comes in variants such as VGG-16 and VGG-19, where the numbers refer to the number of weight layers (convolutional plus fully connected) in the network; VGG-16, for example, has 13 convolutional and 3 fully connected layers. A typical VGG block consists of two or three $3 \times 3$ convolutions with a stride of 1, each followed by a ReLU activation, and ends with a $2 \times 2$ max pooling layer.
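This pattern makes it easy to trace how the feature map changes through the network. The sketch below uses the standard output-size formula $\lfloor (n + 2p - k)/s \rfloor + 1$ and assumes a padding of 1 for every $3 \times 3$ convolution and $2 \times 2$ max pooling with a stride of 2, which is the usual VGG setup. The helper names are hypothetical.

```rust
/// Output size of a convolution or pooling layer along one spatial dimension.
fn out_size(input: usize, kernel: usize, stride: usize, padding: usize) -> usize {
    (input + 2 * padding - kernel) / stride + 1
}

/// VGG-16 feature extractor: `Some(c)` is a 3x3 convolution with `c` filters,
/// `None` is a 2x2 max pooling layer.
fn vgg16_cfg() -> Vec<Option<usize>> {
    vec![
        Some(64), Some(64), None,
        Some(128), Some(128), None,
        Some(256), Some(256), Some(256), None,
        Some(512), Some(512), Some(512), None,
        Some(512), Some(512), Some(512), None,
    ]
}

/// Traces (channels, spatial size) through the stack for a square input.
fn trace(cfg: &[Option<usize>], in_channels: usize, size: usize) -> (usize, usize) {
    cfg.iter().fold((in_channels, size), |(c, s), layer| match layer {
        Some(filters) => (*filters, out_size(s, 3, 1, 1)), // padded conv keeps the size
        None => (c, out_size(s, 2, 2, 0)),                 // pooling halves the size
    })
}

fn main() {
    let cfg = vgg16_cfg();
    println!("CIFAR-10 (32x32): {:?}", trace(&cfg, 3, 32));
    println!("ImageNet (224x224): {:?}", trace(&cfg, 3, 224));
}
```

For a 32x32 input the five pooling layers reduce the feature map to 1x1 with 512 channels, so the classifier receives 512 features; for a 224x224 input the map is 7x7 and the classifier receives 25,088 features.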

One of the key insights behind VGG is that by stacking multiple small filters, the network can effectively replace the need for larger filters, which would otherwise have significantly more parameters and computational requirements. For example, two stacked $3 \times 3$ convolutions achieve a receptive field size equivalent to a $5 \times 5$ filter, while requiring fewer parameters. This stacking also adds more non-linearities, improving the model's ability to learn complex features.
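The comparison can be checked with a few lines of arithmetic. The sketch below counts weights (biases ignored) for layers with the same number of input and output channels, and computes the receptive field of stacked stride-1 convolutions as $1 + n(k - 1)$. The function names and the channel count of 64 are illustrative choices.

```rust
/// Weights (ignoring biases) in `n` stacked k x k convolutions with `c` channels in and out.
fn stacked_weights(n: usize, k: usize, c: usize) -> usize {
    n * k * k * c * c
}

/// Receptive field of `n` stacked k x k convolutions with a stride of 1.
fn receptive_field(n: usize, k: usize) -> usize {
    1 + n * (k - 1)
}

fn main() {
    let c = 64;
    println!(
        "two 3x3 layers: receptive field {}, weights {}",
        receptive_field(2, 3),
        stacked_weights(2, 3, c)
    );
    println!(
        "one 5x5 layer:  receptive field {}, weights {}",
        receptive_field(1, 5),
        stacked_weights(1, 5, c)
    );
}
```

With 64 channels, the two stacked $3 \times 3$ layers use 73,728 weights against 102,400 for the single $5 \times 5$ layer, a saving of 28%, and three stacked layers cover the same $7 \times 7$ field as a single $7 \times 7$ filter.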

While VGG's simplicity makes it easy to interpret and modify, it does have some trade-offs. The increase in depth, although beneficial for learning, makes the model computationally expensive in terms of memory and training time. VGG has a large number of parameters, particularly in its fully connected layers, which leads to higher memory usage. However, the simplicity of the architecture makes it easier to adapt and experiment with, which has led to many successful variants and improvements over the years.
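To see where the parameters sit, the following sketch counts weights and biases for the 13 convolutional layers and the 3 fully connected layers of VGG-16. The helper names are hypothetical, and the two settings shown (a 7x7x512 feature map with 1,000 classes, and a 1x1x512 feature map with 10 classes) are assumptions corresponding to 224x224 and 32x32 inputs respectively.

```rust
/// Weights plus biases of a k x k convolution.
fn conv_params(c_in: u64, c_out: u64, k: u64) -> u64 {
    k * k * c_in * c_out + c_out
}

/// Weights plus biases of a fully connected layer.
fn linear_params(n_in: u64, n_out: u64) -> u64 {
    n_in * n_out + n_out
}

/// Returns (convolutional, fully connected) parameter counts for VGG-16.
/// `flat_features` is the size of the flattened feature map entering the classifier.
fn vgg16_params(flat_features: u64, num_classes: u64) -> (u64, u64) {
    let widths = [64, 64, 128, 128, 256, 256, 256, 512, 512, 512, 512, 512, 512];
    let mut c_in = 3;
    let mut conv = 0;
    for c_out in widths {
        conv += conv_params(c_in, c_out, 3);
        c_in = c_out;
    }
    let fc = linear_params(flat_features, 4096)
        + linear_params(4096, 4096)
        + linear_params(4096, num_classes);
    (conv, fc)
}

fn main() {
    let (conv, fc) = vgg16_params(7 * 7 * 512, 1000);
    println!("224x224 input: conv = {}, fc = {}, total = {}", conv, fc, conv + fc);
    let (conv, fc) = vgg16_params(512, 10);
    println!("32x32 input:   conv = {}, fc = {}, total = {}", conv, fc, conv + fc);
}
```

In the 224x224 setting the fully connected layers account for about 124 million of roughly 138 million parameters, and the first of them alone holds over 100 million.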

From a conceptual perspective, the depth of VGG allows it to capture detailed and complex features from images. This depth makes VGG particularly effective on large datasets like ImageNet, where the abundance of features justifies the complexity of the network. However, there are performance trade-offs—while VGG performs exceptionally well in terms of accuracy, it is computationally heavy compared to later architectures such as ResNet and EfficientNet, which optimize for both depth and efficiency.

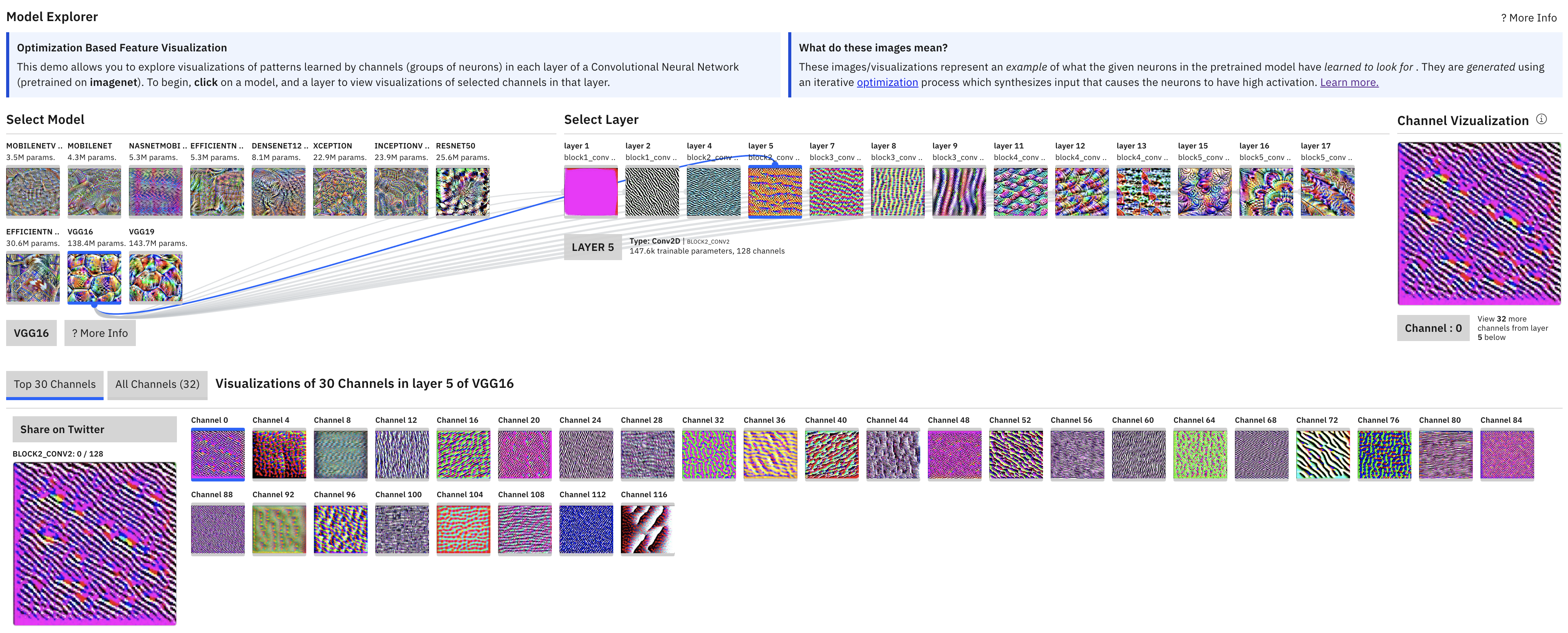

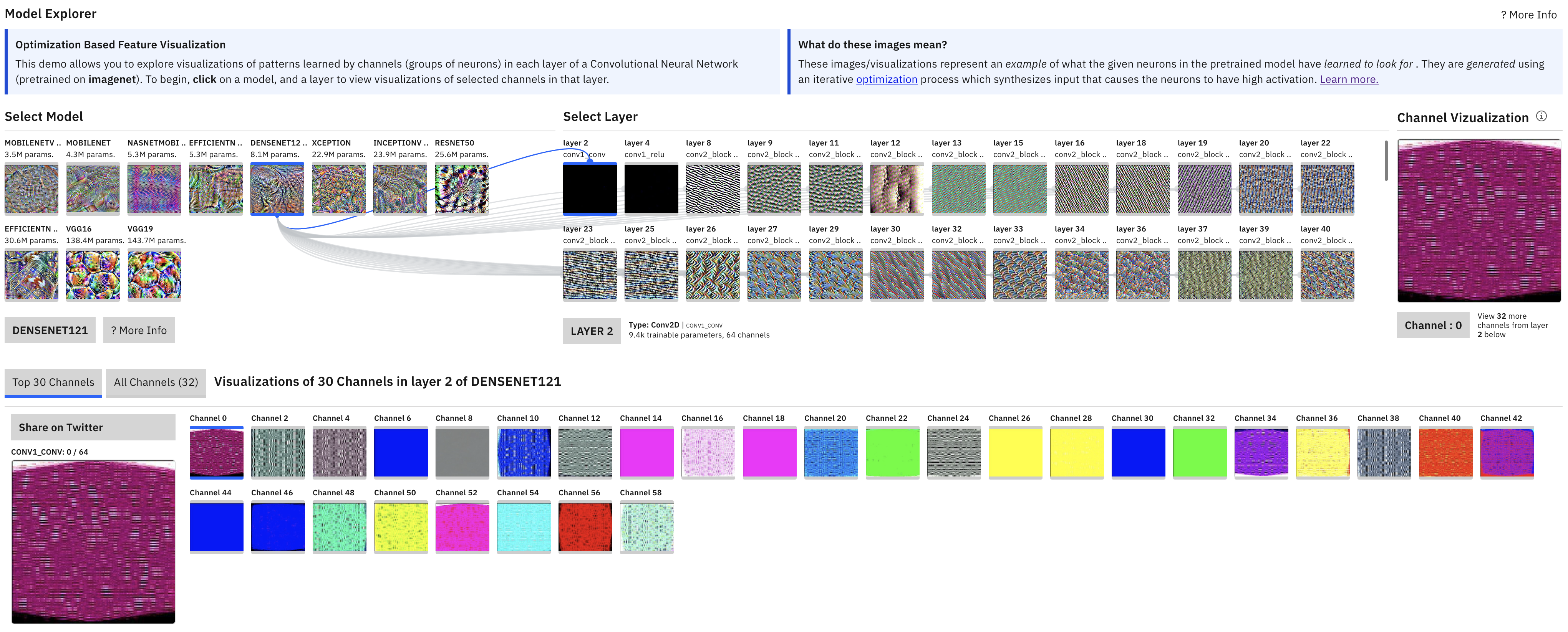

Figure 2: Model visualization of VGG16 architecture.

VGG-16 is a deep convolutional neural network architecture introduced by the Visual Geometry Group at the University of Oxford. It is renowned for its simplicity and uniform structure, consisting of sequential 3x3 convolutional layers with ReLU activations, interleaved with max-pooling layers and followed by fully connected layers at the end. VGG-16 is widely used for image classification tasks and as a baseline, accepting a higher computational cost in exchange for a simple, regular design.

The provided code implements the VGG-16 architecture to classify images in the CIFAR-10 dataset, a benchmark dataset consisting of 60,000 32x32 RGB images across 10 classes. It handles the download, extraction, and preprocessing of the CIFAR-10 dataset, followed by training and evaluating the VGG-16 model. The following code demonstrates how to implement a simplified version of VGG-16 in Rust:

use anyhow::{Result, Context};

use flate2::read::GzDecoder;

use reqwest;

use std::{fs, path::Path};

use tar::Archive;

use tch::{nn, nn::ModuleT, nn::OptimizerConfig, Device, Tensor, vision};

/// URL for the CIFAR-10 dataset

const CIFAR10_URL: &str = "https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz";

/// Function to download and extract the CIFAR-10 dataset.

async fn download_cifar10() -> Result<()> {

let dest = "data/cifar10";

if Path::new(&format!("{}/test_batch.bin", dest)).exists() {

println!("CIFAR-10 dataset already exists, skipping download.");

return Ok(());

}

fs::create_dir_all(dest).context("Failed to create data directory")?;

println!("Downloading CIFAR-10 dataset...");

let response = reqwest::get(CIFAR10_URL).await?;

let bytes = response.bytes().await?;

let tar_gz = GzDecoder::new(&bytes[..]);

let mut archive = Archive::new(tar_gz);

println!("Extracting CIFAR-10 dataset...");

for entry in archive.entries()? {

let mut entry = entry?;

let path = entry.path()?.into_owned();

// Extract only files into the correct directory

if let Some(file_name) = path.file_name() {

let file_path = Path::new(dest).join(file_name);

entry.unpack(file_path)?;

}

}

println!("CIFAR-10 dataset downloaded and extracted successfully.");

Ok(())

}

#[tokio::main]

async fn main() -> Result<()> {

// Ensure the CIFAR-10 dataset is downloaded and extracted.

download_cifar10().await?;

// Run the VGG-16 model training.

run_vgg16()

}

// Define the VGG-16 architecture.

#[derive(Debug)]

struct VGG16 {

features: nn::Sequential,

classifier: nn::SequentialT,

}

impl VGG16 {

fn new(vs: &nn::Path) -> VGG16 {

let mut features = nn::seq();

let mut in_channels = 3;

// VGG-16 convolutional layers configuration.

let cfg: Vec<Option<i64>> = vec![

Some(64), Some(64), None, // MaxPooling

Some(128), Some(128), None,

Some(256), Some(256), Some(256), None,

Some(512), Some(512), Some(512), None,

Some(512), Some(512), Some(512), None,

];

for layer in cfg {

match layer {

Some(filters) => {

features = features

.add(nn::conv2d(

vs,

in_channels,

filters,

3,

nn::ConvConfig { padding: 1, ..Default::default() },

))

.add_fn(|x| x.relu());

in_channels = filters;

}

None => {

features = features.add_fn(|x| x.max_pool2d(&[2, 2], &[2, 2], &[0, 0], &[1, 1], false)); // MaxPooling

}

}

}

// `seq_t` builds a train-aware sequence, so dropout is active only during training

// and is switched off when the model is evaluated.

let classifier = nn::seq_t()

.add(nn::linear(vs, 512, 4096, Default::default()))

.add_fn(|x| x.relu())

.add_fn_t(|x, train| x.dropout(0.5, train))

.add(nn::linear(vs, 4096, 4096, Default::default()))

.add_fn(|x| x.relu())

.add_fn_t(|x, train| x.dropout(0.5, train))

.add(nn::linear(vs, 4096, 10, Default::default()));

VGG16 { features, classifier }

}

}

impl nn::ModuleT for VGG16 {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

xs.apply(&self.features)

.view([-1, 512]) // Flatten

.apply_t(&self.classifier, train)

}

}

// Function to train and test the VGG-16 model on the CIFAR-10 dataset.

fn run_vgg16() -> Result<()> {

// Load the CIFAR-10 dataset.

let cifar_data = vision::cifar::load_dir("data/cifar10")?;

// Use GPU if available, otherwise use CPU.

let vs = nn::VarStore::new(Device::cuda_if_available());

let net = VGG16::new(&vs.root()); // Initialize the VGG-16 model.

let mut opt = nn::Adam::default().build(&vs, 1e-4)?; // Set up the optimizer.

// Reshape and normalize the training and test images.

let train_images = cifar_data.train_images / 255.0;

let train_labels = cifar_data.train_labels;

let test_images = cifar_data.test_images / 255.0;

let test_labels = cifar_data.test_labels;

// Training loop for the VGG-16 model.

for epoch in 1..=20 {

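for (bimages, blabels) in train_images.split(128, 0).into_iter().zip(train_labels.split(128, 0).into_iter()) {

// Move each batch to the same device as the model parameters.

let bimages = bimages.to_device(vs.device());

let blabels = blabels.to_device(vs.device());

let loss = net.forward_t(&bimages, true).cross_entropy_for_logits(&blabels);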

opt.backward_step(&loss); // Backpropagation step.

}

// Calculate and print test accuracy at the end of each epoch.

let test_accuracy = net.batch_accuracy_for_logits(&test_images, &test_labels, vs.device(), 256);

println!("Epoch: {:4}, Test Accuracy: {:5.2}%", epoch, 100. * test_accuracy);

}

Ok(())

}

The code begins by defining a function to download and extract the CIFAR-10 dataset, ensuring the required files are placed in the data/cifar10 directory. The VGG16 struct implements the VGG-16 architecture, where convolutional layers are added sequentially based on a predefined configuration. Max-pooling layers are interspersed to reduce spatial dimensions, while fully connected layers handle the final classification. The main function downloads the dataset and then calls run_vgg16, which loads it using the tch library. Images are scaled, and the model is trained for 20 epochs using the Adam optimizer. Training involves forward propagation, loss computation using cross-entropy, and backpropagation. After each epoch, the model's accuracy on the test set is evaluated and displayed, providing feedback on its performance.

The flexibility of the VGG architecture also allows for modifications. For instance, we can experiment with reducing the number of layers to create a shallower network, or we can adjust the filter sizes to see how the model’s performance changes. These modifications help in understanding the trade-offs between model complexity and performance.

From an industry perspective, VGG continues to be a benchmark architecture, particularly for tasks where interpretability and simplicity are prioritized. Its layer-wise design and regularity make it easy to modify, fine-tune, and experiment with, even though more recent architectures like ResNet and EfficientNet are preferred for computational efficiency. Despite its computational cost, VGG remains a popular choice in scenarios where accuracy is more critical than speed, especially when training is done on powerful hardware.

6.3. ResNet and the Power of Residual Connections

The ResNet (Residual Network) architecture represents a major leap forward in deep learning by addressing a critical issue in training deep neural networks: the vanishing gradient problem. This problem arises when the gradients (used to update weights during backpropagation) become extremely small as they propagate through the layers of a deep network, making it difficult to train very deep networks effectively. ResNet overcomes this limitation through the use of residual connections, or skip connections, which allow the network to bypass certain layers by directly feeding the input into later layers. This innovation enables the training of networks with hundreds of layers, such as ResNet-152, without suffering from the performance degradation typically associated with deeper networks.

Mathematically, the core idea behind ResNet can be formalized as follows: instead of learning an underlying mapping $H(x)$, the network learns a residual mapping $F(x) = H(x) - x$. This allows the model to reformulate the learning task as learning the difference (residual) between the input and the desired output:

$$ y = F(x) + x $$

Here, $F(x)$ represents the transformation learned by a series of convolutional layers, while $x$ is the original input that is added back to the output. This simple but powerful technique allows gradients to flow through the network more effectively, as the identity mapping $x$ ensures that information is preserved across layers. In turn, this mitigates the vanishing gradient problem and enables the network to maintain accuracy even as the number of layers increases.
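The gradient argument can be made explicit with a short derivation. It assumes a stack of blocks with pure identity shortcuts and no activation after the addition, written $x_{l+1} = x_l + F(x_l)$, and uses $\mathcal{L}$ for the loss; both are notational assumptions introduced only for this derivation. Unrolling the recursion from block $l$ to a deeper block $L$ gives:

$$ x_L = x_l + \sum_{i=l}^{L-1} F(x_i) $$

Applying the chain rule then yields:

$$ \frac{\partial \mathcal{L}}{\partial x_l} = \frac{\partial \mathcal{L}}{\partial x_L} \left( 1 + \frac{\partial}{\partial x_l} \sum_{i=l}^{L-1} F(x_i) \right) $$

The constant term 1 carries the gradient from block $L$ directly back to block $l$, regardless of how small the derivatives of the residual branches are, so the gradient is not forced through a long product of layer-wise factors.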

The skip connection mechanism has a profound impact on the stability and efficiency of training deep networks. By directly adding the input to the output of certain layers, ResNet allows gradients to propagate backward through the network more easily, ensuring that they do not diminish to near-zero values. This means that even very deep versions of ResNet, such as ResNet-152, can be trained without encountering the significant degradation in accuracy that would occur in traditional deep networks. This scalability is one of the defining features of ResNet, enabling architectures with depths ranging from ResNet-18 to ResNet-152 and beyond.

One of the key conceptual insights provided by ResNet is the understanding that residual connections allow networks to maintain the flow of information across layers. In traditional deep networks, the deeper the network, the harder it is for information from earlier layers to reach the output. Residual connections solve this by ensuring that at least some part of the input (the identity mapping) is directly passed through to the output. This is particularly important for very deep networks, where maintaining the integrity of information becomes increasingly difficult.

The identity mapping in ResNet plays a crucial role in this architecture. It ensures that the network can easily learn the identity function when needed, meaning that if a deeper layer does not need to transform the input, the network can simply pass the input through unchanged by driving $F(x)$ toward zero. This addresses the degradation problem, in which adding layers to a plain network can increase training error: additional residual blocks can fall back to the identity and learn a transformation only where it helps.
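A small numerical illustration of this property follows. It assumes a toy residual branch $F(x) = Wx$ with a square weight matrix, which is a simplification of the convolutional branch used in practice, and the function name is hypothetical. When all weights are zero, the block returns its input unchanged.

```rust
/// Toy residual block: y = W x + x, where `weights` is a square matrix
/// stored row by row (a stand-in for the convolutional branch).
fn residual_block(x: &[f64], weights: &[Vec<f64>]) -> Vec<f64> {
    weights
        .iter()
        .zip(x)
        .map(|(row, xi)| row.iter().zip(x).map(|(w, xj)| w * xj).sum::<f64>() + xi)
        .collect()
}

fn main() {
    let x = vec![1.0, -2.0, 3.0];

    // With zero weights the residual branch vanishes and the block is the identity.
    let zeros = vec![vec![0.0; 3]; 3];
    println!("zero weights: {:?}", residual_block(&x, &zeros));

    // With non-zero weights the block adds a learned correction to the input.
    let half_identity = vec![
        vec![0.5, 0.0, 0.0],
        vec![0.0, 0.5, 0.0],
        vec![0.0, 0.0, 0.5],
    ];
    println!("0.5 * I weights: {:?}", residual_block(&x, &half_identity));
}
```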

ResNet's modularity also contributes to its scalability and adaptability. Each residual block is essentially a self-contained unit, consisting of a few convolutional layers and a skip connection. This modularity allows for easy extension of the network by simply adding more blocks, making it straightforward to build deeper models (such as ResNet-50 or ResNet-152) without needing to redesign the entire architecture. This modularity also allows ResNet to be adapted to a wide range of tasks, from image classification to object detection and segmentation.
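The depth in a ResNet name follows directly from this block structure. The sketch below counts one initial convolution, the convolutions inside every residual block, and the final fully connected layer, using the per-stage block counts from the ResNet paper; by convention the 1x1 projection convolutions on shortcuts are not counted. The function name is hypothetical.

```rust
/// Number of weight layers in a ResNet: the stem convolution, the convolutions
/// inside all residual blocks, and the final fully connected layer.
fn resnet_depth(blocks_per_stage: &[usize], convs_per_block: usize) -> usize {
    1 + convs_per_block * blocks_per_stage.iter().sum::<usize>() + 1
}

fn main() {
    // Basic blocks contain two 3x3 convolutions.
    println!("ResNet-18:  {}", resnet_depth(&[2, 2, 2, 2], 2));
    println!("ResNet-34:  {}", resnet_depth(&[3, 4, 6, 3], 2));
    // Bottleneck blocks contain a 1x1, a 3x3, and a 1x1 convolution.
    println!("ResNet-50:  {}", resnet_depth(&[3, 4, 6, 3], 3));
    println!("ResNet-101: {}", resnet_depth(&[3, 4, 23, 3], 3));
    println!("ResNet-152: {}", resnet_depth(&[3, 8, 36, 3], 3));
}
```

Moving from ResNet-34 to ResNet-50 keeps the same stage layout and only swaps the block type, while ResNet-101 and ResNet-152 add blocks mainly to the third stage.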

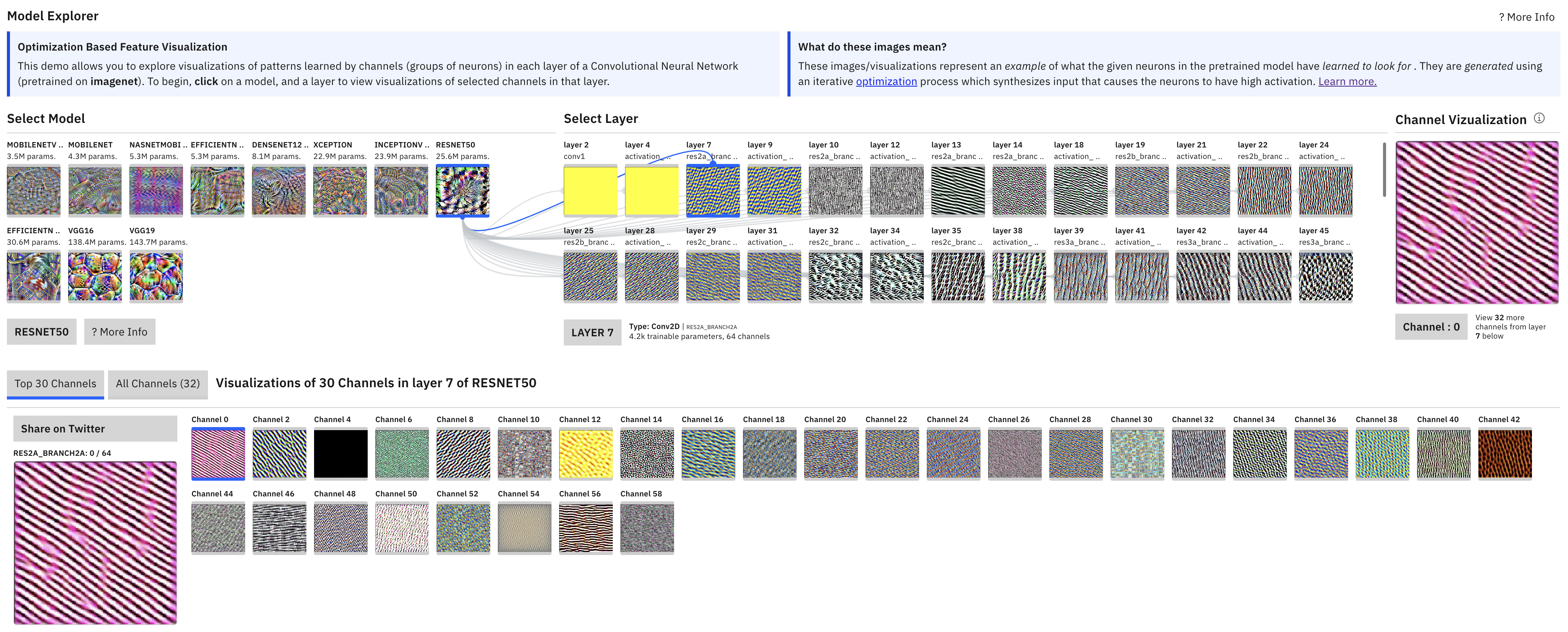

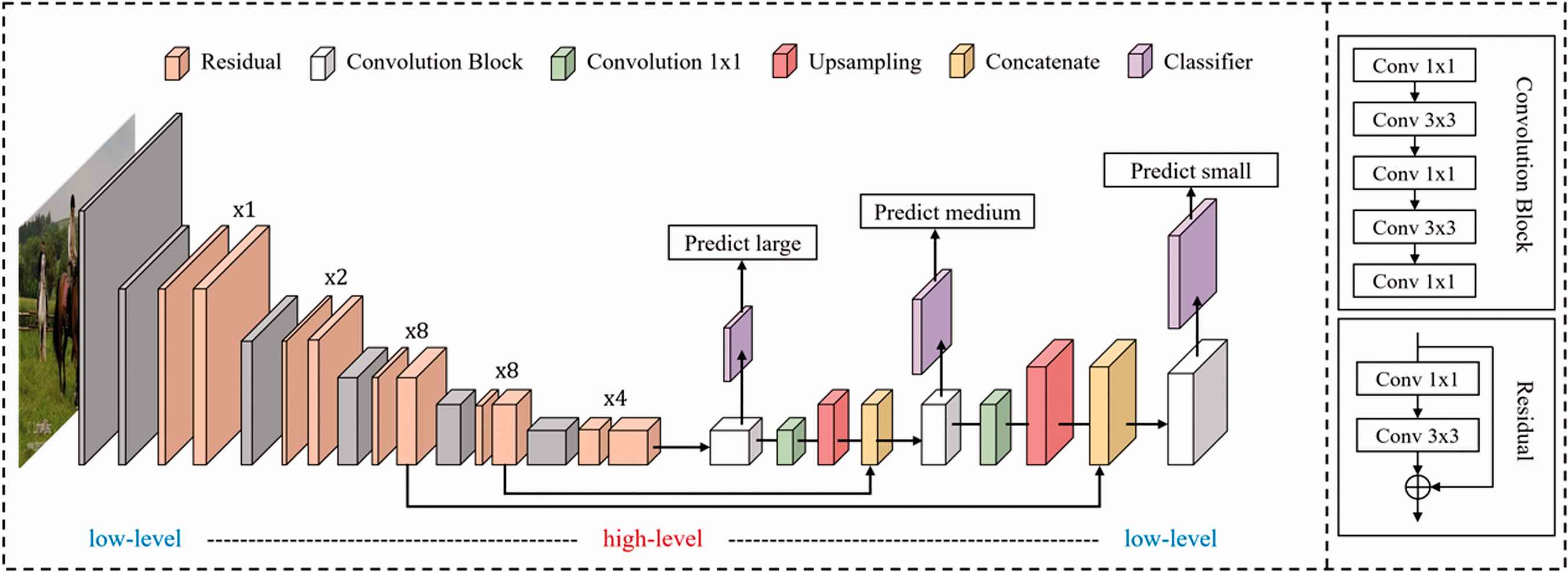

Figure 3: Model visualization of ResNet50 architecture.

Using Rust’s tch-rs library, we can implement a simplified version of ResNet by defining residual blocks and stacking them to create deeper architectures. ResNet-50 relies on residual learning, in which identity mappings (shortcuts) skip over groups of layers; this mitigates the vanishing gradient problem and allows much deeper networks to be trained efficiently. The 50 in its name counts the weight layers: 49 convolutions and one fully connected layer, accompanied by batch normalization and ReLU activations and organized into a series of residual blocks. Each block uses a skip connection to propagate its input features directly, preserving information and improving gradient flow during training.

Below is the implementation of the ResNet-50 architecture using the tch-rs library for classifying images in the CIFAR-10 dataset. This implementation defines the ResNet-50 architecture, sets up the CIFAR-10 dataset, and uses the Adam optimizer for training. After training, we evaluate the model's accuracy on the test set.

use anyhow::{Result, Context};

use flate2::read::GzDecoder;

use reqwest;

use std::{fs, path::Path};

use tar::Archive;

use tch::{nn, nn::ModuleT, nn::OptimizerConfig, Device, Tensor, vision};

/// URL for the CIFAR-10 dataset

const CIFAR10_URL: &str = "https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz";

/// Function to download and extract the CIFAR-10 dataset.

async fn download_cifar10() -> Result<()> {

let dest = "data/cifar10";

if Path::new(&format!("{}/test_batch.bin", dest)).exists() {

println!("CIFAR-10 dataset already exists, skipping download.");

return Ok(());

}

fs::create_dir_all(dest).context("Failed to create data directory")?;

println!("Downloading CIFAR-10 dataset...");

let response = reqwest::get(CIFAR10_URL).await?;

let bytes = response.bytes().await?;

let tar_gz = GzDecoder::new(&bytes[..]);

let mut archive = Archive::new(tar_gz);

println!("Extracting CIFAR-10 dataset...");

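// The archive keeps its files in a `cifar-10-batches-bin/` folder; copy each file

// directly into `dest`, the directory that the existence check and `load_dir` use.

for entry in archive.entries()? {

let mut entry = entry?;

if !entry.header().entry_type().is_file() {

continue;

}

let path = entry.path()?.into_owned();

if let Some(file_name) = path.file_name() {

entry.unpack(Path::new(dest).join(file_name))?;

}

}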

println!("CIFAR-10 dataset downloaded and extracted successfully.");

Ok(())

}

#[tokio::main]

async fn main() -> Result<()> {

// Ensure the CIFAR-10 dataset is downloaded and extracted.

download_cifar10().await?;

// Run the ResNet-50 model training.

run_resnet50()

}

// Define the ResNet-50 architecture.

#[derive(Debug)]

struct ResNet50 {

conv1: nn::Conv2D,

bn1: nn::BatchNorm,

layers: nn::Sequential,

fc: nn::Linear,

}

impl ResNet50 {

fn new(vs: &nn::Path) -> ResNet50 {

let conv1 = nn::conv2d(

vs,

3,

64,

7,

nn::ConvConfig {

stride: 2,

padding: 3,

..Default::default()

},

);

let bn1 = nn::batch_norm2d(vs, 64, Default::default());

let mut layers = nn::seq();

layers = layers

.add(build_residual_block(vs, 64, 256, 3, 1))

.add(build_residual_block(vs, 256, 512, 4, 2))

.add(build_residual_block(vs, 512, 1024, 6, 2))

.add(build_residual_block(vs, 1024, 2048, 3, 2));

let fc = nn::linear(vs, 2048, 10, Default::default());

ResNet50 {

conv1,

bn1,

layers,

fc,

}

}

}

impl nn::ModuleT for ResNet50 {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

xs.apply(&self.conv1)

.apply_t(&self.bn1, train)

.relu()

.max_pool2d(&[3, 3], &[2, 2], &[1, 1], &[1, 1], false)

.apply_t(&self.layers, train)

.adaptive_avg_pool2d(&[1, 1])

.view([-1, 2048])

.apply(&self.fc)

}

}

// Define the Residual Block.

#[derive(Debug)]

struct ResidualBlock {

conv1: nn::Conv2D,

bn1: nn::BatchNorm,

conv2: nn::Conv2D,

bn2: nn::BatchNorm,

conv3: nn::Conv2D,

bn3: nn::BatchNorm,

shortcut: Option<nn::Conv2D>,

}

impl ResidualBlock {

fn new(

vs: &nn::Path,

in_channels: i64,

out_channels: i64,

stride: i64,

shortcut: Option<nn::Conv2D>,

) -> ResidualBlock {

let conv1 = nn::conv2d(vs, in_channels, out_channels / 4, 1, Default::default());

let bn1 = nn::batch_norm2d(vs, out_channels / 4, Default::default());

let conv2 = nn::conv2d(

vs,

out_channels / 4,

out_channels / 4,

3,

nn::ConvConfig { stride, padding: 1, ..Default::default() },

);

let bn2 = nn::batch_norm2d(vs, out_channels / 4, Default::default());

let conv3 = nn::conv2d(vs, out_channels / 4, out_channels, 1, Default::default());

let bn3 = nn::batch_norm2d(vs, out_channels, Default::default());

ResidualBlock {

conv1,

bn1,

conv2,

bn2,

conv3,

bn3,

shortcut,

}

}

fn forward(&self, xs: &Tensor, train: bool) -> Tensor {

let shortcut = match &self.shortcut {

Some(sc) => xs.apply(sc),

None => xs.shallow_clone(),

};

let out = xs.apply(&self.conv1)

.apply_t(&self.bn1, train)

.relu()

.apply(&self.conv2)

.apply_t(&self.bn2, train)

.relu()

.apply(&self.conv3)

.apply_t(&self.bn3, train);

// Add the shortcut, then apply the final ReLU of the bottleneck block.

(out + shortcut).relu()

}

}

// Wrapper for ResidualBlock

#[derive(Debug)]

struct ResidualBlockWrapper {

block: ResidualBlock,

}

impl ResidualBlockWrapper {

fn new(

vs: &nn::Path,

in_channels: i64,

out_channels: i64,

stride: i64,

shortcut: Option<nn::Conv2D>,

) -> Self {

Self {

block: ResidualBlock::new(vs, in_channels, out_channels, stride, shortcut),

}

}

}

impl nn::Module for ResidualBlockWrapper {

fn forward(&self, xs: &Tensor) -> Tensor {

self.block.forward(xs, false)

}

}

// Function to build a series of residual blocks.

fn build_residual_block(

vs: &nn::Path,

in_channels: i64,

out_channels: i64,

blocks: i64,

stride: i64,

) -> nn::Sequential {

let mut layer = nn::seq();

let shortcut = if stride != 1 || in_channels != out_channels {

Some(nn::conv2d(

vs,

in_channels,

out_channels,

1,

nn::ConvConfig {

stride,

..Default::default()

},

))

} else {

None

};

layer = layer.add(ResidualBlockWrapper::new(

vs,

in_channels,

out_channels,

stride,

shortcut,

));

for _ in 1..blocks {

layer = layer.add(ResidualBlockWrapper::new(vs, out_channels, out_channels, 1, None));

}

layer

}

// Function to train and test the ResNet-50 model on the CIFAR-10 dataset.

fn run_resnet50() -> Result<()> {

// Load the CIFAR-10 dataset.

let cifar_data = vision::cifar::load_dir("data/cifar10")?;

// Use GPU if available, otherwise use CPU.

let vs = nn::VarStore::new(Device::cuda_if_available());

let net = ResNet50::new(&vs.root()); // Initialize the ResNet-50 model.

let mut opt = nn::Sgd::default().build(&vs, 0.01)?; // Set up SGD optimizer.

// Reshape and normalize the training and test images.

let train_images = cifar_data.train_images / 255.0;

let train_labels = cifar_data.train_labels;

let test_images = cifar_data.test_images / 255.0;

let test_labels = cifar_data.test_labels;

// Training loop for the ResNet-50 model.

for epoch in 1..=20 {

for (bimages, blabels) in train_images.split(128, 0).into_iter().zip(train_labels.split(128, 0).into_iter()) {

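// Move each batch to the model's device before the forward pass.

let loss = net.forward_t(&bimages.to_device(vs.device()), true).cross_entropy_for_logits(&blabels.to_device(vs.device()));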

opt.backward_step(&loss); // Backpropagation step.

}

// Calculate and print test accuracy at the end of each epoch.

let test_accuracy = net.batch_accuracy_for_logits(&test_images, &test_labels, vs.device(), 256);

println!("Epoch: {:4}, Test Accuracy: {:5.2}%", epoch, 100. * test_accuracy);

}

Ok(())

}

The code implements a ResNet-50 model for classifying images in the CIFAR-10 dataset. It begins by downloading and preparing the CIFAR-10 dataset. The ResNet50 struct defines the architecture, including initial convolutional and batch normalization layers, four stages of residual blocks, and a fully connected output layer for classification. Each residual block is implemented by the ResidualBlock struct, and ResidualBlockWrapper adapts it to the nn::Module trait so that blocks can be stored in an nn::Sequential. Because nn::Module's forward method has no training flag, the wrapper calls the block with train set to false, so the batch normalization layers inside the residual blocks always run in evaluation mode; building the stages with nn::seq_t (nn::SequentialT) and implementing nn::ModuleT for the block would propagate the flag. The training loop iterates over batches of CIFAR-10 images, computes the cross-entropy loss, and updates model weights using stochastic gradient descent (SGD). Test accuracy is evaluated after each epoch to track the model's performance. The implementation relies on the tch library for PyTorch-like tensor operations and neural network building in Rust.

ResNet is a family of CNN architectures designed to mitigate the vanishing gradient problem through residual connections. The different variants, such as ResNet18, ResNet34, ResNet50, ResNet101, and ResNet152, differ primarily in the depth of the network, i.e., the number of layers. ResNet18 has 18 layers, ResNet34 has 34 layers, ResNet50 features 50 layers, ResNet101 has 101 layers, and ResNet152 is the deepest with 152 layers. These variants offer a trade-off between computational cost and accuracy, with deeper models generally achieving higher performance at the expense of increased complexity and training time.
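The layer counts in the variant names can be reproduced from the number of residual blocks per stage. The sketch below assumes the usual counting convention: one stem convolution, two convolutions per basic block (ResNet18/34) or three per bottleneck block (ResNet50 and deeper), and one final fully connected layer, with projection shortcuts not counted. The function name `resnet_depth` is illustrative.

```rust
/// Number of weighted layers: one stem convolution, the convolutions inside
/// every residual block, and the final fully connected layer.
fn resnet_depth(blocks_per_stage: [u32; 4], convs_per_block: u32) -> u32 {
    let block_convs = blocks_per_stage.iter().sum::<u32>() * convs_per_block;
    1 + block_convs + 1
}

fn main() {
    // (name, residual blocks in each of the four stages, convolutions per block)
    let variants = [
        ("ResNet18", [2, 2, 2, 2], 2),
        ("ResNet34", [3, 4, 6, 3], 2),
        ("ResNet50", [3, 4, 6, 3], 3),
        ("ResNet101", [3, 4, 23, 3], 3),
        ("ResNet152", [3, 8, 36, 3], 3),
    ];
    for (name, stages, convs) in variants {
        println!("{name}: {} layers", resnet_depth(stages, convs));
    }
}
```

Note that ResNet34 and ResNet50 share the same stage layout; the extra depth of ResNet50 comes from replacing two-convolution basic blocks with three-convolution bottleneck blocks.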

By experimenting with different ResNet depths (e.g., ResNet-18, ResNet-50, ResNet-101), we can analyze the trade-offs between depth, accuracy, and computational cost. Deeper networks tend to perform better on larger, more complex datasets, but they also require more computational resources in terms of memory and processing power. Shallower networks, like ResNet-18, may be sufficient for smaller datasets or less complex tasks, offering a good balance between performance and efficiency.

[dependencies]

accelerate-src = "0.3.2"

anyhow = "1.0"

candle-core = "0.8.0"

candle-examples = "0.8.0"

candle-nn = "0.8.0"

candle-transformers = "0.8.0"

clap = { version = "4", features = ["derive"] }

hf-hub = "0.3.2"

use candle_core::{DType, IndexOp, D};

use candle_nn::{Module, VarBuilder};

use candle_transformers::models::resnet;

use clap::{Parser, ValueEnum};

#[derive(Clone, Copy, Debug, ValueEnum)]

enum Which {

#[value(name = "18")]

Resnet18,

#[value(name = "34")]

Resnet34,

#[value(name = "50")]

Resnet50,

#[value(name = "101")]

Resnet101,

#[value(name = "152")]

Resnet152,

}

#[derive(Parser)]

struct Args {

#[clap(long)]

model: Option<String>,

#[clap(long)]

image: String,

/// Run on CPU rather than on GPU.

#[clap(long)]

cpu: bool,

/// Variant of the model to use.

#[clap(value_enum, long, default_value_t = Which::Resnet18)]

which: Which,

}

pub fn main() -> anyhow::Result<()> {

let args = Args::parse();

let device = candle_examples::device(args.cpu)?;

let image = candle_examples::imagenet::load_image224(args.image)?.to_device(&device)?;

println!("loaded image {image:?}");

let model_file = match args.model {

None => {

let api = hf_hub::api::sync::Api::new()?;

let api = api.model("lmz/candle-resnet".into());

let filename = match args.which {

Which::Resnet18 => "resnet18.safetensors",

Which::Resnet34 => "resnet34.safetensors",

Which::Resnet50 => "resnet50.safetensors",

Which::Resnet101 => "resnet101.safetensors",

Which::Resnet152 => "resnet152.safetensors",

};

api.get(filename)?

}

Some(model) => model.into(),

};

let vb = unsafe { VarBuilder::from_mmaped_safetensors(&[model_file], DType::F32, &device)? };

let class_count = candle_examples::imagenet::CLASS_COUNT as usize;

let model = match args.which {

Which::Resnet18 => resnet::resnet18(class_count, vb)?,

Which::Resnet34 => resnet::resnet34(class_count, vb)?,

Which::Resnet50 => resnet::resnet50(class_count, vb)?,

Which::Resnet101 => resnet::resnet101(class_count, vb)?,

Which::Resnet152 => resnet::resnet152(class_count, vb)?,

};

println!("model built");

let logits = model.forward(&image.unsqueeze(0)?)?;

let prs = candle_nn::ops::softmax(&logits, D::Minus1)?

.i(0)?

.to_vec1::<f32>()?;

let mut prs = prs.iter().enumerate().collect::<Vec<_>>();

prs.sort_by(|(_, p1), (_, p2)| p2.total_cmp(p1));

for &(category_idx, pr) in prs.iter().take(5) {

println!(

"{:24}: {:.2}%",

candle_examples::imagenet::CLASSES[category_idx],

100. * pr

);

}

Ok(())

}

In the sample code, the candle crate is used to run inference with pre-trained ResNet models in Rust; no training takes place. candle_nn provides the neural network modules and operations, while candle_transformers includes the pre-defined ResNet models. The code uses the clap crate for argument parsing, allowing users to specify the image file, the model variant (ResNet18, ResNet34, etc.), an optional local weights file, and whether to run on a CPU or GPU. It loads the image, fetches the model weights from the Hugging Face Hub when no local file is given, and uses VarBuilder to memory-map the weights. The selected ResNet variant is then initialized and used to classify the image. The classification output (logits) is passed through a softmax operation to obtain probabilities, and the top 5 predicted categories are displayed with their respective probabilities. This implementation showcases a practical use of Rust for running pre-trained deep learning models.
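The post-processing step (softmax followed by a top-k selection) does not depend on candle and can be sketched with the standard library alone. The helper names `softmax` and `top_k` are illustrative and are not part of candle's API.

```rust
/// Numerically stable softmax: subtract the maximum logit before exponentiating.
fn softmax(logits: &[f32]) -> Vec<f32> {
    let max = logits.iter().copied().fold(f32::NEG_INFINITY, f32::max);
    let exps: Vec<f32> = logits.iter().map(|&l| (l - max).exp()).collect();
    let sum: f32 = exps.iter().sum();
    exps.iter().map(|&e| e / sum).collect()
}

/// Indices and probabilities of the `k` most likely classes, highest first.
fn top_k(probs: &[f32], k: usize) -> Vec<(usize, f32)> {
    let mut indexed: Vec<(usize, f32)> = probs.iter().copied().enumerate().collect();
    indexed.sort_by(|a, b| b.1.total_cmp(&a.1));
    indexed.truncate(k);
    indexed
}

fn main() {
    let logits: [f32; 4] = [1.0, 3.0, 0.5, 2.0];
    for (idx, p) in top_k(&softmax(&logits), 2) {
        println!("class {idx}: {:.2}%", 100.0 * p);
    }
}
```

Subtracting the maximum logit leaves the probabilities unchanged but avoids overflow when logits are large.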

From an industry perspective, ResNet has become a standard architecture for image recognition tasks and is widely used in various applications, from medical imaging to autonomous vehicles. Its modular design, scalability, and ability to train deep networks without performance degradation have made it one of the most successful and widely adopted architectures in deep learning.

In conclusion, ResNet’s introduction of residual connections has revolutionized the training of deep networks, making it possible to build models that are both deep and highly performant. By implementing and experimenting with ResNet in Rust, developers can take advantage of this architecture's powerful design principles while benefiting from Rust’s performance and memory safety features, making it ideal for applications that require both speed and reliability.

6.4. Inception Networks and Multi-Scale Feature Extraction

Inception networks represent a significant advancement in Convolutional Neural Network (CNN) architectures by introducing the concept of multi-scale feature extraction within a single layer. The core idea of Inception networks is to process input data at multiple scales simultaneously by applying various convolutional and pooling operations in parallel. This approach enables the model to capture both fine-grained details and broader, coarser patterns within the same layer, which is especially important when the features in the data vary widely in size. The flexibility and efficiency of Inception networks have made them highly effective for tasks like image classification and object detection.

The original Inception module (also known as Inception v1) is composed of multiple parallel paths, each of which applies a different type of operation to the input data. These paths include $1 \times 1$, $3 \times 3$, and $5 \times 5$ convolutions, along with a max pooling operation. The results from these different paths are concatenated together to form the output. Mathematically, if $x$ represents the input and $f_{1 \times 1}(x), f_{3 \times 3}(x), f_{5 \times 5}(x)$, and pooling $p(x)$ are the outputs from the different paths, the output of the Inception module is the concatenation of these results:

$$ y = \text{Concat}(f_{1 \times 1}(x), f_{3 \times 3}(x), f_{5 \times 5}(x), p(x)) $$

This approach allows the network to learn both local features (using smaller filters) and global patterns (using larger filters) simultaneously, providing a robust mechanism for handling a wide variety of feature sizes in the data.
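Concatenation along the channel axis only works if every branch produces the same spatial size. A minimal sketch of the arithmetic, assuming stride 1 and padding $(k-1)/2$ for a $k \times k$ window; the input size and channel counts below are illustrative values, not taken from the chapter's code.

```rust
/// Output height/width of a convolution or pooling window.
fn out_size(input: usize, kernel: usize, stride: usize, padding: usize) -> usize {
    (input + 2 * padding - kernel) / stride + 1
}

fn main() {
    let input = 28;
    // (branch, kernel size, padding, output channels)
    let branches = [("1x1", 1, 0, 64), ("3x3", 3, 1, 128), ("5x5", 5, 2, 32), ("pool", 3, 1, 32)];
    let mut total_channels = 0;
    for (name, kernel, padding, channels) in branches {
        let size = out_size(input, kernel, 1, padding);
        println!("{name}: {channels} x {size} x {size}");
        total_channels += channels;
    }
    // The concatenated output keeps the spatial size and sums the channels.
    println!("concatenated: {total_channels} channels");
}
```

Each branch keeps the input's height and width, so the module's output has as many channels as the branches produce in total.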

A key innovation in the Inception v1 architecture was the use of $1 \times 1$ convolutions to reduce the dimensionality of the input before applying larger filters like $3 \times 3$ and $5 \times 5$. This dimensionality reduction technique significantly improves the computational efficiency of the network by reducing the number of input channels for the larger convolutions, thereby lowering the number of parameters and the computational cost. Formally, a $1 \times 1$ convolution can be expressed as:

$$ y[i,j,k] = \sum_{c=1}^{C} W_{k,c} \, x[i,j,c], \quad k = 1, \dots, K $$

where $x[i,j,c]$ represents the input value at location $(i,j)$ and channel $c$, $W_{k,c}$ is the learned weight connecting input channel $c$ to output channel $k$, and $K$ is the number of output channels. Choosing $K < C$ reduces the number of channels while preserving the spatial resolution, making this operation an effective tool for controlling the computational complexity of the network.
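The saving from this reduction is easy to quantify by counting weights. The sketch below compares a direct $5 \times 5$ convolution with a $1 \times 1$ reduction followed by a $5 \times 5$ convolution; the channel counts (192 in, 16 after reduction, 32 out) are assumed for illustration, and bias terms are ignored.

```rust
/// Weights in a k x k convolution (bias terms ignored).
fn conv_params(kernel: u64, c_in: u64, c_out: u64) -> u64 {
    kernel * kernel * c_in * c_out
}

fn main() {
    let (c_in, c_reduced, c_out) = (192, 16, 32);
    // 5x5 convolution applied directly to all input channels.
    let direct = conv_params(5, c_in, c_out);
    // 1x1 convolution to shrink the channels, then the 5x5 convolution.
    let reduced = conv_params(1, c_in, c_reduced) + conv_params(5, c_reduced, c_out);
    println!("direct 5x5:   {direct} weights");
    println!("1x1 then 5x5: {reduced} weights");
}
```

Because the number of multiply-accumulate operations is the weight count times the number of output positions, the same ratio applies to the computational cost.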

The evolution of Inception modules from v1 to v4 introduced various refinements and optimizations to further improve both performance and efficiency. For example, Inception v2 introduced factorized convolutions, where larger filters (like $5 \times 5$) are replaced with multiple smaller filters (such as two $3 \times 3$ convolutions). This reduces the number of parameters while maintaining the network's ability to capture larger-scale patterns. Inception v3 and v4 continued this trend, introducing additional architectural improvements, such as using batch normalization to stabilize training and label smoothing to prevent overfitting. The Inception-ResNet variant combined the strengths of Inception with the residual connections from ResNet, allowing for even deeper and more powerful networks.
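The factorization argument can be checked numerically: two stacked $3 \times 3$ convolutions cover the same $5 \times 5$ receptive field with fewer weights. The sketch assumes stride 1 and a constant channel count throughout the stack; the helper names and the channel count of 64 are illustrative.

```rust
/// Receptive field of a stack of stride-1 convolutions with the given kernel sizes.
fn receptive_field(kernels: &[u64]) -> u64 {
    1 + kernels.iter().map(|k| k - 1).sum::<u64>()
}

/// Weights in a stack of convolutions that keep `channels` channels throughout.
fn stack_params(kernels: &[u64], channels: u64) -> u64 {
    kernels.iter().map(|k| k * k * channels * channels).sum()
}

fn main() {
    let channels = 64;
    let stacks: [Vec<u64>; 2] = [vec![5], vec![3, 3]];
    for kernels in &stacks {
        println!(
            "{:?}: receptive field {}, {} weights",
            kernels,
            receptive_field(kernels),
            stack_params(kernels, channels)
        );
    }
}
```

Per input-output channel pair, the single $5 \times 5$ filter needs 25 weights and the two $3 \times 3$ filters need 18, a reduction of 28%.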

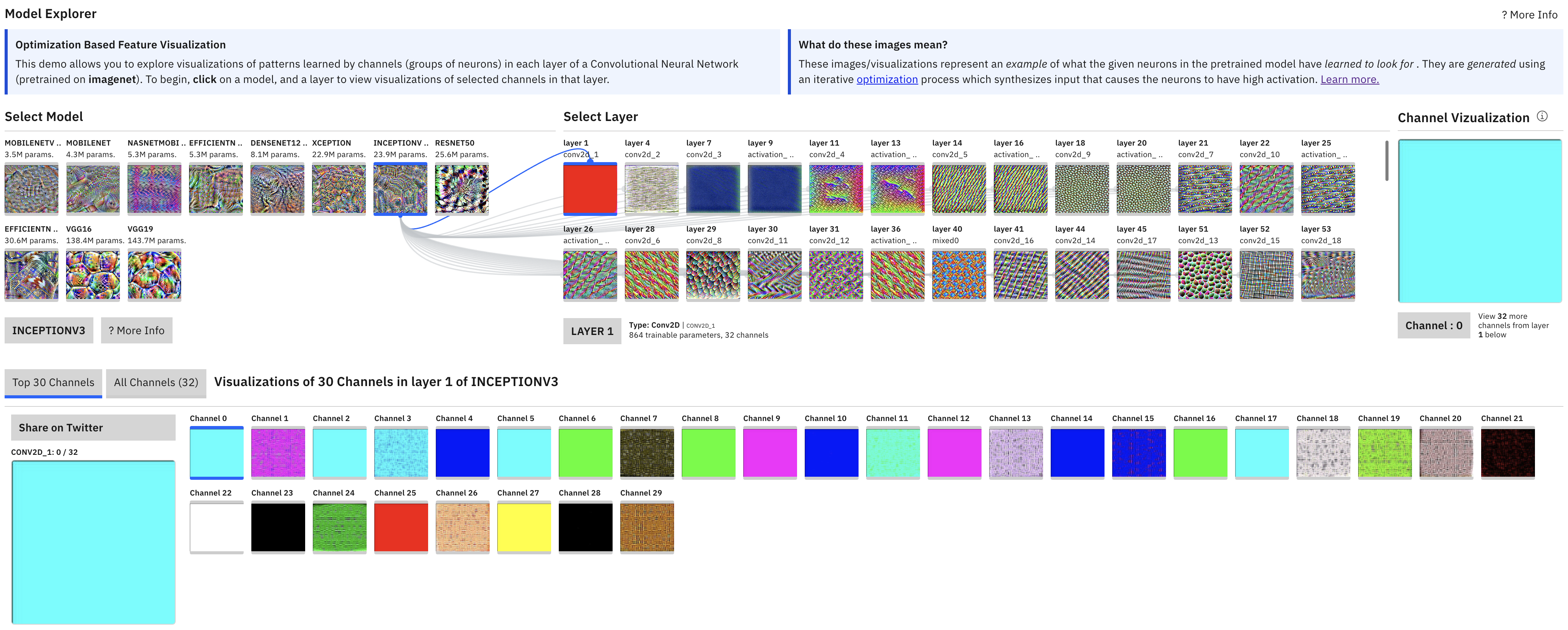

Figure 4: Visualization of InceptionV3 architecture.

From a conceptual perspective, the multi-scale feature extraction provided by Inception modules is critical for handling complex patterns in data. By applying convolutions with different filter sizes in parallel, the network can learn to recognize features of varying sizes without needing to manually tune the filter sizes for specific tasks. This architectural diversity within the same layer allows Inception networks to capture both fine details and broader structures, making them highly effective for tasks that require a nuanced understanding of the input data, such as object detection or scene recognition.

At the same time, Inception networks strike a balance between computational efficiency and model performance. The use of dimensionality reduction with $1 \times 1$ convolutions significantly reduces the number of parameters, making the network more efficient without sacrificing performance. This makes Inception networks particularly well-suited for tasks that require high accuracy but also need to be computationally feasible, such as deployment in resource-constrained environments like mobile devices.

The InceptionV4 architecture builds upon the strengths of previous Inception networks with a deeper and more uniform module design. InceptionV4 itself does not use residual connections; its sibling, Inception-ResNet, adds them to improve gradient flow. The network consists of multiple modules: Stem, Inception-A, Inception-B, Inception-C, Reduction-A, and Reduction-B. The Stem acts as an initial feature extractor, while the Inception modules capture spatial features efficiently by combining convolutions of different sizes in parallel branches. The Reduction modules downsample the spatial dimensions and increase the number of channels. This modular design enables effective representation learning, making InceptionV4 a powerful model for image classification tasks.

use anyhow::{Result, Context};

use flate2::read::GzDecoder;

use reqwest;

use std::{fs, path::Path};

use tar::Archive;

use tch::{nn, nn::ModuleT, nn::OptimizerConfig, Device, Tensor, vision};

/// URL for the CIFAR-10 dataset

const CIFAR10_URL: &str = "https://www.cs.toronto.edu/~kriz/cifar-10-binary.tar.gz";

/// Function to download and extract the CIFAR-10 dataset.

async fn download_cifar10() -> Result<()> {

let dest = "data/cifar10";

if Path::new(dest).exists() {

println!("CIFAR-10 dataset already exists, skipping download.");

return Ok(());

}

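fs::create_dir_all("data").context("Failed to create data directory")?;

println!("Downloading CIFAR-10 dataset...");

let response = reqwest::get(CIFAR10_URL).await?;

let bytes = response.bytes().await?;

let tar_gz = GzDecoder::new(&bytes[..]);

let mut archive = Archive::new(tar_gz);

println!("Extracting CIFAR-10 dataset...");

// The archive unpacks into "data/cifar-10-batches-bin"; rename it to match `dest`.

archive.unpack("data")?;

fs::rename("data/cifar-10-batches-bin", dest).context("Failed to move extracted dataset")?;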

println!("CIFAR-10 dataset downloaded and extracted successfully.");

Ok(())

}

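/// Define a simplified Inception V4-style network sized for 32x32 CIFAR-10 images.

#[derive(Debug)]

struct InceptionV4 {

stem: nn::SequentialT,

inception_a: nn::FuncT<'static>,

reduction_a: nn::FuncT<'static>,

inception_b: nn::FuncT<'static>,

reduction_b: nn::FuncT<'static>,

inception_c: nn::FuncT<'static>,

fc: nn::Linear,

}

impl InceptionV4 {

fn new(vs: &nn::Path) -> InceptionV4 {

// Stem: 3x32x32 -> 64x14x14.

let stem = nn::seq_t()

.add(InceptionV4::conv_bn(vs, 3, 32, 3, 1, 0))

.add(InceptionV4::conv_bn(vs, 32, 32, 3, 2, 0))

.add(InceptionV4::conv_bn(vs, 32, 64, 3, 1, 1));

// Each block's input width is the concatenated output width of the previous block.

let inception_a = InceptionV4::inception_a_block(vs, 64, 32); // 64 + 64 + 96 + 32 = 256 channels

let reduction_a = InceptionV4::reduction_a_block(vs, 256); // 384 + 96 + 256 = 736 channels, 6x6

let inception_b = InceptionV4::inception_b_block(vs, 736, 128); // 3 * 128 = 384 channels

let reduction_b = InceptionV4::reduction_b_block(vs, 384); // 320 + 384 = 704 channels, 2x2

let inception_c = InceptionV4::inception_c_block(vs, 704, 256); // 4 * 256 = 1024 channels

let fc = nn::linear(vs, 1024, 10, Default::default());

InceptionV4 { stem, inception_a, reduction_a, inception_b, reduction_b, inception_c, fc }

}

/// Convolution followed by batch normalization and ReLU.

fn conv_bn(vs: &nn::Path, c_in: i64, c_out: i64, ksize: i64, stride: i64, padding: i64) -> nn::SequentialT {

let cfg = nn::ConvConfig { stride, padding, bias: false, ..Default::default() };

nn::seq_t()

.add(nn::conv2d(vs, c_in, c_out, ksize, cfg))

.add(nn::batch_norm2d(vs, c_out, Default::default()))

.add_fn(|x| x.relu())

}

/// 3x3 max pooling with the given stride and padding.

fn pool(xs: &Tensor, stride: i64, padding: i64) -> Tensor {

xs.max_pool2d(&[3, 3], &[stride, stride], &[padding, padding], &[1, 1], false)

}

fn inception_a_block(vs: &nn::Path, in_channels: i64, pool_channels: i64) -> nn::FuncT<'static> {

let b1 = Self::conv_bn(vs, in_channels, 64, 1, 1, 0);

let b2 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, 48, 1, 1, 0))

.add(Self::conv_bn(vs, 48, 64, 5, 1, 2));

let b3 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, 64, 1, 1, 0))

.add(Self::conv_bn(vs, 64, 96, 3, 1, 1))

.add(Self::conv_bn(vs, 96, 96, 3, 1, 1));

let b4 = Self::conv_bn(vs, in_channels, pool_channels, 1, 1, 0);

// Run the four branches on the same input and concatenate along the channel axis.

nn::func_t(move |xs, train| {

Tensor::cat(&[xs.apply_t(&b1, train), xs.apply_t(&b2, train), xs.apply_t(&b3, train), Self::pool(xs, 1, 1).apply_t(&b4, train)], 1)

})

}

fn inception_b_block(vs: &nn::Path, in_channels: i64, channels: i64) -> nn::FuncT<'static> {

let b1 = Self::conv_bn(vs, in_channels, channels, 1, 1, 0);

let b2 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, channels, 1, 1, 0))

.add(Self::conv_bn(vs, channels, channels, 7, 1, 3));

let b3 = Self::conv_bn(vs, in_channels, channels, 1, 1, 0);

nn::func_t(move |xs, train| {

Tensor::cat(&[xs.apply_t(&b1, train), xs.apply_t(&b2, train), Self::pool(xs, 1, 1).apply_t(&b3, train)], 1)

})

}

fn inception_c_block(vs: &nn::Path, in_channels: i64, channels: i64) -> nn::FuncT<'static> {

let b1 = Self::conv_bn(vs, in_channels, channels, 1, 1, 0);

let b2 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, channels, 1, 1, 0))

.add(Self::conv_bn(vs, channels, channels, 3, 1, 1));

let b3 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, channels, 1, 1, 0))

.add(Self::conv_bn(vs, channels, channels, 3, 1, 1))

.add(Self::conv_bn(vs, channels, channels, 3, 1, 1));

let b4 = Self::conv_bn(vs, in_channels, channels, 1, 1, 0);

nn::func_t(move |xs, train| {

Tensor::cat(&[xs.apply_t(&b1, train), xs.apply_t(&b2, train), xs.apply_t(&b3, train), Self::pool(xs, 1, 1).apply_t(&b4, train)], 1)

})

}

fn reduction_a_block(vs: &nn::Path, in_channels: i64) -> nn::FuncT<'static> {

let b1 = Self::conv_bn(vs, in_channels, 384, 3, 2, 0);

let b2 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, 64, 1, 1, 0))

.add(Self::conv_bn(vs, 64, 96, 3, 1, 1))

.add(Self::conv_bn(vs, 96, 96, 3, 2, 0));

// The third branch is a stride-2 max pool that keeps all input channels.

nn::func_t(move |xs, train| {

Tensor::cat(&[xs.apply_t(&b1, train), xs.apply_t(&b2, train), Self::pool(xs, 2, 0)], 1)

})

}

fn reduction_b_block(vs: &nn::Path, in_channels: i64) -> nn::FuncT<'static> {

let b1 = nn::seq_t()

.add(Self::conv_bn(vs, in_channels, 192, 1, 1, 0))

.add(Self::conv_bn(vs, 192, 320, 3, 2, 0));

nn::func_t(move |xs, train| {

Tensor::cat(&[xs.apply_t(&b1, train), Self::pool(xs, 2, 0)], 1)

})

}

}

impl nn::ModuleT for InceptionV4 {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

xs.apply_t(&self.stem, train)

.apply_t(&self.inception_a, train)

.apply_t(&self.reduction_a, train)

.apply_t(&self.inception_b, train)

.apply_t(&self.reduction_b, train)

.apply_t(&self.inception_c, train)

.adaptive_avg_pool2d(&[1, 1])

.view([-1, 1024])

.apply(&self.fc)

}

}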

/// Train and test the Inception V4 model on CIFAR-10.

fn run_inception_v4() -> Result<()> {

// Load CIFAR-10 dataset

let cifar_data = vision::cifar::load_dir("data/cifar10")?;

// Use GPU if available, otherwise CPU

let vs = nn::VarStore::new(Device::cuda_if_available());

let net = InceptionV4::new(&vs.root());

let mut opt = nn::Adam::default().build(&vs, 1e-3)?;

let train_images = cifar_data.train_images / 255.0;

let train_labels = cifar_data.train_labels;

let test_images = cifar_data.test_images / 255.0;

let test_labels = cifar_data.test_labels;

// Training loop

for epoch in 1..=20 {

for (bimages, blabels) in train_images.split(128, 0).into_iter().zip(train_labels.split(128, 0).into_iter()) {

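// Move each batch to the model's device before the forward pass.

let loss = net.forward_t(&bimages.to_device(vs.device()), true).cross_entropy_for_logits(&blabels.to_device(vs.device()));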

opt.backward_step(&loss);

}

// Test accuracy

let test_accuracy = net.batch_accuracy_for_logits(&test_images, &test_labels, vs.device(), 256);

println!("Epoch: {:4}, Test Accuracy: {:5.2}%", epoch, 100. * test_accuracy);

}

Ok(())

}

#[tokio::main]

async fn main() -> Result<()> {

download_cifar10().await?;

run_inception_v4()

}

The provided code is a Rust program that downloads the CIFAR-10 dataset, extracts it, and trains an Inception V4 model on the dataset using the tch crate, which provides bindings for PyTorch in Rust. The download_cifar10 function asynchronously fetches the CIFAR-10 dataset from the specified URL, extracts the tar.gz file using flate2 and tar crates, and saves the dataset to the local "data/cifar10" directory. The InceptionV4 struct defines the architecture of the Inception V4 model, with various layers including convolutions, inception blocks, and reductions. The forward_t method implements the forward pass through the network. The run_inception_v4 function sets up the model, optimizer, and training loop, and then trains the model for 20 epochs using the CIFAR-10 training set while evaluating the model's accuracy on the test set after each epoch. The main function orchestrates the process by first downloading the dataset and then calling the training function. This implementation utilizes GPU if available, thanks to the Device::cuda_if_available function from the tch crate, enabling accelerated computation for deep learning tasks.

To fully leverage the power of Inception networks, training them on large, complex datasets like ImageNet is essential, as their multi-scale feature extraction capabilities allow them to capture both detailed and abstract features from diverse image sets. Inception networks are particularly advantageous due to their ability to achieve high accuracy while remaining computationally efficient, thanks to techniques like dimensionality reduction and factorized convolutions. This balance between performance and efficiency makes them ideal for large-scale applications in industry, such as image classification, object detection, and real-time video analysis, where both accuracy and speed are critical. Additionally, developers can experiment with custom Inception modules by modifying paths and operations, such as replacing 5x5 convolutions with consecutive 3x3 convolutions to reduce parameters or adding dilated convolutions to capture larger-scale features. By exploring these variations, developers can optimize Inception networks for specific tasks or datasets, enhancing their performance, efficiency, or both.

In summary, Inception networks have become a cornerstone of modern CNN architectures, providing a powerful mechanism for extracting features at multiple scales within a single layer. Through innovations such as multi-scale feature extraction and dimensionality reduction, Inception networks strike a balance between computational efficiency and model performance, making them highly versatile for a wide range of applications. Implementing Inception modules in Rust allows developers to explore these concepts while benefiting from Rust’s efficiency and safety features, making it an excellent choice for high-performance machine learning applications.

6.5. DenseNet and Feature Reuse

The DenseNet (Densely Connected Convolutional Networks) architecture is a modern CNN design that introduces the concept of dense connectivity between layers. Unlike traditional CNNs, where each layer passes its output to the next layer sequentially, DenseNet connects each layer to every other layer in a feed-forward fashion. This design encourages feature reuse, allowing the network to propagate both the original input and the learned features from previous layers forward through the network. As a result, DenseNet achieves high accuracy with fewer parameters and improved gradient flow, making it highly efficient.

Mathematically, the output of the $l^{th}$ layer in a DenseNet is defined as:

$$x_l = H_l([x_0, x_1, ..., x_{l-1}])$$

where $[x_0, x_1, ..., x_{l-1}]$ represents the concatenation of the feature maps from all previous layers, and $H_l(\cdot)$ is the operation (e.g., convolution, activation) applied at the current layer. This formulation contrasts with traditional CNNs, where each layer receives only the output of the previous layer. By concatenating the outputs of all preceding layers, DenseNet promotes feature reuse, allowing the model to use earlier features in later stages of the network.
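The recurrence above can be sketched on plain vectors to show how the running concatenation grows. Here `toy_layer` is a hypothetical stand-in for $H_l$ (in a real DenseNet it would be batch normalization, ReLU, and a convolution), so the numbers it produces carry no meaning; only the data flow matters.

```rust
/// Toy stand-in for H_l: maps the concatenated features to `growth` new
/// features. Each new feature is the mean of the inputs plus its index.
fn toy_layer(inputs: &[f32], growth: usize) -> Vec<f32> {
    let mean = inputs.iter().sum::<f32>() / inputs.len() as f32;
    (0..growth).map(|i| mean + i as f32).collect()
}

/// Runs `num_layers` densely connected layers: every layer sees all earlier outputs.
fn dense_block(x0: Vec<f32>, num_layers: usize, growth: usize) -> Vec<f32> {
    let mut features = x0; // starts as [x_0]
    for _ in 0..num_layers {
        // x_l = H_l([x_0, ..., x_{l-1}])
        let new_features = toy_layer(&features, growth);
        // Append x_l so that the next layer receives [x_0, ..., x_l].
        features.extend(new_features);
    }
    features
}

fn main() {
    let out = dense_block(vec![1.0; 4], 3, 2);
    println!("{} features: {:?}", out.len(), out);
}
```

The original input survives unchanged at the front of the output, which is the feature reuse the equation describes.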

One of the key components of DenseNet is the concept of a growth rate. The growth rate controls how much information each new layer contributes to the network. A smaller growth rate means that each layer adds a limited number of new feature maps, leading to more compact models. Conversely, a larger growth rate increases the number of features learned by each layer, potentially improving performance but at the cost of increased model size. The number of feature maps at layer $l$ in a DenseNet is given by:

$$f_l = f_0 + l \cdot k$$

where $f_0$ is the number of input feature maps, $l$ is the layer index, and $k$ is the growth rate. This linear growth ensures that the model remains manageable in size, even as the depth increases.
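Applying this formula block by block gives the channel counts of a DenseNet-121-style network. The sketch assumes the standard configuration of 64 channels after the initial convolution, a growth rate of 32, dense blocks of 6, 12, 24, and 16 layers, and transition layers that halve the channel count.

```rust
/// Feature maps after `layers` dense layers: f_l = f_0 + l * k.
fn dense_block_channels(f0: u32, layers: u32, growth_rate: u32) -> u32 {
    f0 + layers * growth_rate
}

fn main() {
    let growth_rate = 32;
    let block_sizes = [6, 12, 24, 16];
    let mut channels = 64; // after the initial convolution
    for (i, layers) in block_sizes.iter().enumerate() {
        channels = dense_block_channels(channels, *layers, growth_rate);
        println!("after dense block {}: {channels}", i + 1);
        if i + 1 < block_sizes.len() {
            channels /= 2; // the transition layer halves the channel count
            println!("after transition {}: {channels}", i + 1);
        }
    }
}
```

The last dense block ends with 1,024 feature maps, which is the input width a classifier placed after global average pooling must expect.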

The dense blocks in DenseNet, which consist of several layers with dense connectivity, play a crucial role in improving gradient flow and feature propagation. Because each layer's output feeds directly into every subsequent layer of the block, each layer receives gradients directly from all later layers and, through them, from the loss. This mitigates the vanishing gradient problem and allows for more effective training of deep networks. Additionally, by reusing features across layers, DenseNet reduces the need to learn redundant features, leading to more parameter-efficient models.

From a conceptual perspective, DenseNet’s dense connectivity promotes feature reuse, which is a key innovation that reduces the total number of parameters while maintaining high accuracy. In traditional networks, each layer learns new features independently, often resulting in redundant feature extraction. DenseNet, by contrast, allows each layer to build on previously learned features, ensuring that the model can extract and propagate important features throughout the network. This reuse of features also enables DenseNet to achieve competitive performance with fewer parameters compared to architectures like ResNet.

The growth rate is an important hyperparameter in DenseNet that balances model complexity and performance. By controlling how many new features each layer adds, the growth rate influences the size of the model and its ability to learn. A smaller growth rate results in more compact models with fewer parameters, while a larger growth rate increases the representational capacity of the model but also its computational requirements. In practice, choosing the right growth rate depends on the specific task and the computational resources available.

Another advantage of DenseNet is its ability to achieve high accuracy with fewer parameters. By reusing features across layers, DenseNet reduces the redundancy that often arises in deeper networks, allowing the model to learn more efficiently. This is particularly valuable in applications where computational resources are limited, as DenseNet can deliver strong performance without requiring excessively large models.

Figure 5: Visualization of DenseNet121 architecture.

Here’s an implementation of a DenseNet-121-style architecture using the tch-rs library. Within each dense block, every layer receives the concatenated outputs of all previous layers in that block rather than only the output of the layer before it, which encourages feature reuse and reduces the number of parameters. The name DenseNet-121 refers to the variant with 121 layers. It includes four dense blocks, transition layers that reduce feature map dimensions between them, and a final global average pooling layer followed by a classifier. The classifier in the code below has 10 outputs (for example, for CIFAR-10); an ImageNet model would use 1,000 outputs instead. For brevity, each dense layer uses a single $3 \times 3$ convolution rather than a bottleneck of $1 \times 1$ and $3 \times 3$ convolutions.

use tch::{nn, nn::ModuleT, Device, Tensor};

/// Define the DenseNet-121 architecture.

#[derive(Debug)]

struct DenseNet121 {

features: nn::SequentialT,

classifier: nn::Linear,

}

impl DenseNet121 {

fn new(vs: &nn::Path) -> DenseNet121 {

// nn::seq_t is required because batch normalization needs the training flag.

let mut features = nn::seq_t();

// Initial Convolution

features = features

.add(nn::conv2d(vs, 3, 64, 7, nn::ConvConfig { stride: 2, padding: 3, ..Default::default() }))

.add(nn::batch_norm2d(vs, 64, Default::default()))

.add_fn(|x| x.relu())

.add_fn(|x| x.max_pool2d(&[3, 3], &[2, 2], &[1, 1], &[1, 1], false));

// Dense Blocks and Transition Layers (each block adds num_layers * growth_rate channels)

features = features

.add(Self::dense_block(vs, 6, 64, 32))

.add(Self::transition_layer(vs, 256, 128))

.add(Self::dense_block(vs, 12, 128, 32))

.add(Self::transition_layer(vs, 512, 256))

.add(Self::dense_block(vs, 24, 256, 32))

.add(Self::transition_layer(vs, 1024, 512))

.add(Self::dense_block(vs, 16, 512, 32))

.add(nn::batch_norm2d(vs, 1024, Default::default()))

.add_fn(|x| x.relu())

.add_fn(|x| x.adaptive_avg_pool2d(&[1, 1]));

// Classifier: the last dense block outputs 512 + 16 * 32 = 1024 channels.

let classifier = nn::linear(vs, 1024, 10, Default::default());

DenseNet121 { features, classifier }

}

fn dense_block(vs: &nn::Path, num_layers: usize, in_channels: i64, growth_rate: i64) -> nn::SequentialT {

let mut block = nn::seq_t();

let mut channels = in_channels;

for _ in 0..num_layers {

block = block.add(Self::dense_layer(vs, channels, growth_rate));

channels += growth_rate;

}

block

}

fn dense_layer(vs: &nn::Path, in_channels: i64, growth_rate: i64) -> nn::FuncT<'static> {

let bn = nn::batch_norm2d(vs, in_channels, Default::default());

let conv = nn::conv2d(vs, in_channels, growth_rate, 3, nn::ConvConfig { padding: 1, ..Default::default() });

// Concatenate the input with the new features so later layers see both.

nn::func_t(move |xs, train| {

let ys = xs.apply_t(&bn, train).relu().apply(&conv);

Tensor::cat(&[xs, &ys], 1)

})

}

fn transition_layer(vs: &nn::Path, in_channels: i64, out_channels: i64) -> nn::SequentialT {

nn::seq_t()

.add(nn::batch_norm2d(vs, in_channels, Default::default()))

.add_fn(|x| x.relu())

.add(nn::conv2d(vs, in_channels, out_channels, 1, Default::default()))

.add_fn(|x| x.avg_pool2d_default(2))

}

}

impl nn::ModuleT for DenseNet121 {

fn forward_t(&self, xs: &Tensor, train: bool) -> Tensor {

xs.apply_t(&self.features, train)

.view([-1, 1024])

.apply(&self.classifier)

}

}

The code snippet defines the DenseNet-121 architecture using the Rust library tch, which provides tools for deep learning. It starts by defining a structure for the network, DenseNet121, which includes two main components: features (a sequential block of layers) and classifier (a fully connected layer). The features block includes an initial convolution layer, followed by dense blocks, transition layers, and global average pooling. The dense_block function builds a block of layers where each new layer is connected to all previous ones, while the transition_layer function reduces the spatial dimensions of the feature maps. Finally, the forward_t method implements the forward pass, applying the feature layers followed by the classifier to produce the output.

When experimenting with DenseNet, developers can adjust the growth rate and the number of layers in each dense block to optimize the model for different tasks. A smaller growth rate will result in a more compact model with fewer parameters, while a larger growth rate will increase the model’s representational power. However, care must be taken to balance model complexity with computational efficiency, especially when deploying models in resource-constrained environments.
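
The effect of the growth rate on model width can be checked with plain arithmetic before any network is built. The following is a minimal sketch in standard Rust, not part of the tch listing: the helper names are hypothetical, and the block sizes (6, 12, 24, 16), the compression factor of 0.5 in each transition, and the initial width of twice the growth rate are assumed from the standard DenseNet-121 configuration rather than taken from the code above.

```rust
/// Channels leaving a dense block: every layer appends `growth_rate` feature
/// maps to the running concatenation of all earlier outputs.
fn dense_block_out_channels(in_channels: u64, num_layers: u64, growth_rate: u64) -> u64 {
    in_channels + num_layers * growth_rate
}

/// Channels leaving each stage of a DenseNet: a dense block followed by a
/// transition layer that multiplies the channel count by `compression`.
/// The last block has no transition after it.
fn densenet_stage_channels(
    init_channels: u64,
    block_layers: &[u64],
    growth_rate: u64,
    compression: f64,
) -> Vec<u64> {
    let mut channels = init_channels;
    let mut per_stage = Vec::with_capacity(block_layers.len());
    for (i, &layers) in block_layers.iter().enumerate() {
        channels = dense_block_out_channels(channels, layers, growth_rate);
        if i + 1 < block_layers.len() {
            // Transition layer: compress the channel count.
            channels = (channels as f64 * compression).floor() as u64;
        }
        per_stage.push(channels);
    }
    per_stage
}

fn main() {
    // Assumed configuration: DenseNet-121 block sizes.
    let blocks = [6, 12, 24, 16];
    for growth_rate in [12, 24, 32] {
        // Assumed initial width of 2 * growth_rate.
        let stages = densenet_stage_channels(2 * growth_rate, &blocks, growth_rate, 0.5);
        println!("growth_rate = {growth_rate:>2}: channels per stage = {stages:?}");
    }
}
```

With a growth rate of 32 the four stages end at 128, 256, 512, and 1024 channels, so the width of the final classifier input follows directly from the growth rate and the block sizes. Halving the growth rate roughly halves every stage, which is why it is the main lever for trading accuracy against model size.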

DenseNet is used in practice because it reaches high accuracy with relatively few parameters, which suits applications where both accuracy and model size matter. For example, DenseNet has been applied in medical imaging tasks, where accurate feature extraction is crucial but computational resources may be limited. Its parameter efficiency also makes it a candidate for real-time applications such as video analysis and autonomous systems, although the concatenated feature maps can make it memory-intensive, so latency should be measured on the target hardware.

In conclusion, DenseNet’s dense connectivity and feature reuse enable it to achieve high accuracy with fewer parameters, making it a highly efficient architecture for deep learning. Through the use of growth rates, DenseNet balances model size and performance, and its dense blocks promote effective gradient flow and feature propagation. Implementing DenseNet in Rust provides an opportunity to explore these innovations while taking advantage of Rust’s robust performance capabilities, making it an ideal choice for both research and industry applications.

6.6. EfficientNet and Model Scaling

EfficientNet is a family of convolutional neural network (CNN) models introduced in the paper "EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks" (2019) by Mingxing Tan and Quoc V. Le. The key idea behind EfficientNet is to improve the accuracy of deep learning models while maintaining computational efficiency. Traditionally, model scaling—whether increasing depth, width, or resolution—has been performed in an ad-hoc manner, without a structured method for optimizing the trade-offs between computational cost and model performance. EfficientNet addresses this by introducing a compound scaling strategy that uniformly scales depth, width, and resolution together, based on a compound coefficient. This scaling strategy results in better model performance with fewer parameters and reduced computational costs, compared to models that scale these factors independently.

In terms of performance, EfficientNet set a new benchmark when it was published, outperforming earlier models such as ResNet, DenseNet, and NASNet on ImageNet while requiring fewer parameters and FLOPs (floating-point operations). The largest model in the original family, EfficientNet-B7, reached state-of-the-art ImageNet top-1 accuracy at the time while being, according to the paper, 8.4x smaller and 6.1x faster at inference than the best previous convolutional network. This efficiency makes EfficientNet particularly valuable for real-world applications, especially in resource-constrained environments where both accuracy and computational efficiency are critical. EfficientNet's success has inspired further research into efficient model architectures, making it a cornerstone in the evolution of deep learning models that balance high accuracy with low computational demands.

Compound scaling balances three dimensions of a neural network: depth (the number of layers), width (the number of channels per layer), and resolution (the size of the input image). Traditional CNN architectures typically scale only one of these dimensions, for example by adding layers to go from ResNet-18 to ResNet-200. This yields diminishing returns, because a much deeper network fed low-resolution images, or a very wide but shallow one, does not use its additional computation effectively. Scaling all three dimensions together in fixed proportions produces models that reach higher accuracy with fewer parameters and less computation.

At the heart of EfficientNet's scaling method is the compound coefficient $\phi$, which determines how much the network's depth, width, and input resolution should be scaled. Formally, the scaling for each dimension is defined as follows:

$$ \text{depth scale} = \alpha^\phi, \quad \text{width scale} = \beta^\phi, \quad \text{resolution scale} = \gamma^\phi $$

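Here, $\alpha$, $\beta$, and $\gamma$ are constants, each at least 1, that control the rate of scaling for depth, width, and resolution, respectively. They are determined by a small grid search on the baseline network; the paper reports $\alpha = 1.2$, $\beta = 1.1$, and $\gamma = 1.15$ for EfficientNet-B0. The compound coefficient $\phi$ is chosen by the user according to how much additional compute is available. The constants are chosen such that: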

$$ \alpha \cdot \beta^2 \cdot \gamma^2 \approx 2 $$

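The exponents in this constraint reflect how cost grows: the FLOPs of a convolutional network scale roughly linearly with depth but quadratically with width and with resolution. Scaling with coefficient $\phi$ therefore multiplies total FLOPs by about $(\alpha \cdot \beta^2 \cdot \gamma^2)^\phi \approx 2^\phi$, so each unit increase in $\phi$ roughly doubles the computational cost while all three dimensions grow together. In this way, EfficientNet avoids over-expanding one dimension at the expense of others, leading to a more efficient network.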
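
The scaling rule and its cost constraint can be evaluated numerically. The sketch below uses only the Rust standard library; the CompoundScale type and its methods are hypothetical names introduced for illustration, and the constants are the B0 grid-search values reported in the EfficientNet paper ($\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$).

```rust
/// Multipliers produced by compound scaling for a given coefficient phi.
#[derive(Debug, Clone, Copy)]
struct CompoundScale {
    depth: f64,
    width: f64,
    resolution: f64,
}

impl CompoundScale {
    /// depth = alpha^phi, width = beta^phi, resolution = gamma^phi.
    fn new(alpha: f64, beta: f64, gamma: f64, phi: f64) -> Self {
        Self {
            depth: alpha.powf(phi),
            width: beta.powf(phi),
            resolution: gamma.powf(phi),
        }
    }

    /// Approximate FLOPs multiplier: linear in depth, quadratic in width
    /// and in resolution.
    fn flops_factor(&self) -> f64 {
        self.depth * self.width.powi(2) * self.resolution.powi(2)
    }
}

fn main() {
    // Constants reported for the B0 baseline in the EfficientNet paper.
    let (alpha, beta, gamma) = (1.2, 1.1, 1.15);
    for phi in 0..=3 {
        let s = CompoundScale::new(alpha, beta, gamma, phi as f64);
        println!(
            "phi = {phi}: depth x{:.3}, width x{:.3}, resolution x{:.3}, FLOPs x{:.2}",
            s.depth,
            s.width,
            s.resolution,
            s.flops_factor()
        );
    }
}
```

For $\phi = 1$ the FLOPs factor is $1.2 \cdot 1.1^2 \cdot 1.15^2 \approx 1.92$, close to the target of 2, and it is squared for $\phi = 2$. The published B1 to B7 variants use hand-tuned multipliers and resolutions, so these numbers illustrate the rule rather than reproduce any specific variant.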

EfficientNet's main building block is the Mobile Inverted Bottleneck Convolution (MBConv), the inverted residual block introduced in MobileNetV2, to which EfficientNet adds squeeze-and-excitation. MBConv improves computational efficiency while preserving representational power: a 1x1 expansion layer increases the number of channels, a depthwise convolution filters each channel separately, and a 1x1 projection layer reduces the channels back down. Because the expensive spatial filtering is done per channel rather than across all channel pairs, the block needs far fewer multiply-adds than a standard convolution operating at the same expanded width. The EfficientNet family ranges from EfficientNet-B0, the baseline, to EfficientNet-B7, with each successive model scaled up in depth, width, and input resolution by the compound scaling method rather than along a single dimension.
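
The savings from the expand, depthwise, project structure can be estimated by counting weights. This is a simplified sketch in standard Rust with hypothetical helper names: it ignores biases, batch normalization, and the squeeze-and-excitation branch, and it compares an MBConv block against a dense convolution applied at the expanded width, which is an assumption about what a fair baseline looks like. The channel sizes are illustrative.

```rust
/// Weights in a dense k x k convolution (bias and normalization ignored).
fn standard_conv_weights(c_in: u64, c_out: u64, k: u64) -> u64 {
    k * k * c_in * c_out
}

/// Weights in a simplified MBConv block: 1x1 expansion, k x k depthwise
/// convolution, 1x1 projection. Squeeze-and-excitation, bias, and batch
/// normalization parameters are left out.
fn mbconv_weights(c_in: u64, c_out: u64, k: u64, expand_ratio: u64) -> u64 {
    let expanded = c_in * expand_ratio;
    let expansion = c_in * expanded; // 1x1 conv: c_in -> expanded
    let depthwise = k * k * expanded; // one k x k filter per channel
    let projection = expanded * c_out; // 1x1 conv: expanded -> c_out
    expansion + depthwise + projection
}

fn main() {
    // Illustrative sizes: 40 -> 80 channels, 3x3 kernel, expansion ratio 6.
    let (c_in, c_out, k, expand_ratio) = (40, 80, 3, 6);
    let expanded = c_in * expand_ratio;
    let mbconv = mbconv_weights(c_in, c_out, k, expand_ratio);
    let dense = standard_conv_weights(expanded, expanded, k);
    println!("MBConv weights: {mbconv}");
    println!("dense {k}x{k} conv at width {expanded}: {dense}");
    println!("ratio: {:.1}x", dense as f64 / mbconv as f64);
}
```

For these sizes the MBConv block has 30,960 weights against 518,400 for the dense convolution. Most of the MBConv cost sits in the two 1x1 convolutions, while the depthwise step contributes only 2,160 weights.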

The compound scaling strategy enables EfficientNet models to outperform other architectures with similar parameter counts by scaling depth, width, and resolution together in fixed proportions. The baseline model, EfficientNet-B0, was found with Neural Architecture Search (NAS), which automates architecture design by searching a large space of candidate networks; for EfficientNet the search optimized both accuracy and FLOPs. Once the baseline is fixed, the compound scaling method is applied to create larger versions of the model, from EfficientNet-B1 to EfficientNet-B7, which progressively increase the network's depth, width, and input resolution. This results in models that cover a wide range of deployments, from mobile devices with limited computational power (EfficientNet-B0 or B1) to large-scale server environments where accuracy is paramount (EfficientNet-B7).
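
Turning width and depth multipliers into a concrete network requires rounding, because channel counts and layer repeats must be integers. The sketch below follows the rounding scheme commonly used in EfficientNet reference implementations: channels are snapped to a multiple of 8 without falling more than 10% below the scaled value, and repeats are rounded up. The function names, the B0 stage table, and the multipliers 1.1 and 1.2 are assumptions for illustration and are not taken from the candle code later in this section.

```rust
/// Scale a channel count by `width_mult` and snap it to a multiple of
/// `divisor`, without dropping more than 10% below the scaled value.
fn round_filters(filters: u32, width_mult: f64, divisor: u32) -> u32 {
    let scaled = filters as f64 * width_mult;
    let d = divisor as f64;
    // Round to the nearest multiple of the divisor.
    let mut rounded = ((scaled + d / 2.0) / d).floor() * d;
    if rounded < d {
        rounded = d;
    }
    if rounded < 0.9 * scaled {
        rounded += d;
    }
    rounded as u32
}

/// Scale the number of times a block is repeated, rounding up.
fn round_repeats(repeats: u32, depth_mult: f64) -> u32 {
    (repeats as f64 * depth_mult).ceil() as u32
}

fn main() {
    // Assumed B0 baseline stages: (output channels, block repeats).
    let base: [(u32, u32); 7] = [
        (16, 1), (24, 2), (40, 2), (80, 3), (112, 3), (192, 4), (320, 1),
    ];
    // Illustrative multipliers; each variant uses its own pair.
    let (width_mult, depth_mult) = (1.1, 1.2);
    for (channels, repeats) in base {
        println!(
            "{channels:>3} ch x{repeats} -> {:>3} ch x{}",
            round_filters(channels, width_mult, 8),
            round_repeats(repeats, depth_mult)
        );
    }
}
```

Rounding to a multiple of 8 keeps layer widths friendly to hardware vector units, and it means small multipliers leave narrow stages unchanged: with a width multiplier of 1.1, a 16-channel stage stays at 16 while a 40-channel stage grows to 48.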

EfficientNet’s approach to scaling and NAS offers several advantages over traditional methods. In conventional networks, increasing the depth or width of a model can improve performance, but often at the cost of increased computational complexity and diminishing returns. EfficientNet’s compound scaling method addresses this issue by scaling the network in a balanced manner, ensuring that the model grows efficiently and remains computationally feasible. This makes EfficientNet one of the most resource-efficient architectures, offering excellent performance even with fewer parameters compared to other models like ResNet or DenseNet. Furthermore, this flexibility allows practitioners to choose a version of EfficientNet that best fits their specific use case, whether it's for high-efficiency mobile applications or high-accuracy server-based deployments. The combination of NAS and compound scaling is what enables EfficientNet to achieve superior accuracy and efficiency, making it a versatile and high-performing model for both research and real-world applications.

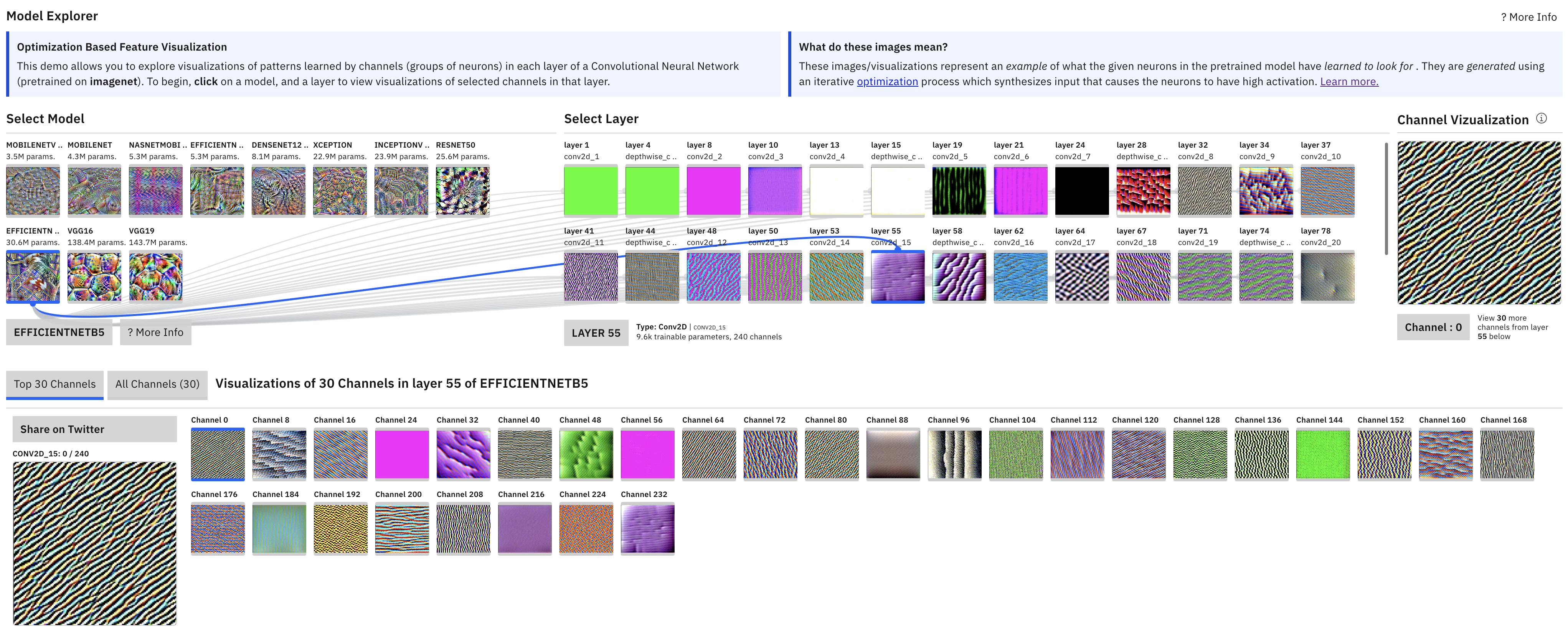

Figure 6: Visualization of EfficientNet-B5 architecture.

This Rust program demonstrates how to load and run a pre-trained EfficientNet model for image classification using the candle deep learning framework. The --which flag selects a variant from EfficientNet-B0 to EfficientNet-B7 (B2 by default), each offering a different trade-off between computational cost and accuracy. Weights are downloaded from the Hugging Face Hub unless a local safetensors file is supplied with --model, and --cpu forces execution on the CPU. The input image is loaded and resized to 224x224 pixels by the load_image224 helper, then passed through the selected model to predict the top 5 classes along with their probabilities. The program prints the predictions in a human-readable format, showing the class names and their corresponding confidence scores.

//! EfficientNet implementation.

//!

//! https://arxiv.org/abs/1905.11946

use candle_core::{DType, IndexOp, D};

use candle_nn::{Module, VarBuilder};

use candle_transformers::models::efficientnet::{EfficientNet, MBConvConfig};

use clap::{Parser, ValueEnum};

use hf_hub::api::sync::Api; // Ensure we use the correct API for model downloading

#[derive(Clone, Copy, Debug, ValueEnum)]

enum Which {

B0,

B1,

B2,

B3,

B4,

B5,

B6,

B7,

}

#[derive(Parser)]

struct Args {

#[arg(long)]

model: Option<String>,

#[arg(long)]

image: String,

/// Run on CPU rather than on GPU.

#[arg(long)]

cpu: bool,

/// Variant of the model to use.

#[arg(value_enum, long, default_value_t = Which::B2)]

which: Which,

}

pub fn main() -> anyhow::Result<()> {

let args = Args::parse();

// Select device based on whether CPU or GPU is requested

let device = candle_examples::device(args.cpu)?;

// Load the image, resized to 224x224 and normalized with ImageNet statistics

let image = candle_examples::imagenet::load_image224(args.image)?.to_device(&device)?;

println!("Loaded image: {image:?}");

// Download the model if not specified

let model_file = match args.model {

None => {

let api = Api::new()?;

let api = api.model("lmz/candle-efficientnet".into());

let filename = match args.which {

Which::B0 => "efficientnet-b0.safetensors",

Which::B1 => "efficientnet-b1.safetensors",

Which::B2 => "efficientnet-b2.safetensors",

Which::B3 => "efficientnet-b3.safetensors",

Which::B4 => "efficientnet-b4.safetensors",

Which::B5 => "efficientnet-b5.safetensors",

Which::B6 => "efficientnet-b6.safetensors",

Which::B7 => "efficientnet-b7.safetensors",

};

api.get(filename)?

}

Some(model) => model.into(),

};

// Memory-map the safetensors weights; unsafe because the file must not change while it is mapped

let vb = unsafe { VarBuilder::from_mmaped_safetensors(&[model_file], DType::F32, &device)? };

// Set up the EfficientNet configuration based on the selected variant

let cfg = match args.which {

Which::B0 => MBConvConfig::b0(),

Which::B1 => MBConvConfig::b1(),

Which::B2 => MBConvConfig::b2(),

Which::B3 => MBConvConfig::b3(),

Which::B4 => MBConvConfig::b4(),

Which::B5 => MBConvConfig::b5(),

Which::B6 => MBConvConfig::b6(),

Which::B7 => MBConvConfig::b7(),

};

// Instantiate the EfficientNet model

let model = EfficientNet::new(vb, cfg, candle_examples::imagenet::CLASS_COUNT as usize)?;

println!("Model built successfully");

// Run inference on the input image